TECHNOLOGY

Deepfake Technology: The Complete Human Guide to Understanding, Using, and Protecting Yourself From AI-Generated Media

Deepfake technology used to sound like something ripped straight from a sci-fi movie. Today, it’s sitting quietly in our social feeds, customer service calls, marketing campaigns, and unfortunately, in scams and misinformation too. If you’ve ever watched a video and thought, “That looks real… but something feels off,” there’s a good chance you were looking at a deepfake.

In its simplest form, deepfake technology uses artificial intelligence to create realistic-looking or realistic-sounding fake media. But the implications go far beyond novelty videos or celebrity face swaps. Deepfakes are changing how we think about trust, identity, creativity, security, and even truth itself.

In this guide, I’ll walk you through deepfake technology the way a human expert would explain it over coffee — clearly, honestly, and without hype. You’ll learn how it works, where it’s genuinely useful, where it’s dangerous, how to spot it, what tools exist, and how businesses, creators, and everyday people can use it responsibly.

Whether you’re a content creator, marketer, educator, business owner, or just someone trying to stay informed, this article will give you a real-world understanding you can actually use.

What is deepfake technology, really?

Deepfake technology is a form of synthetic media created using artificial intelligence, primarily deep learning models, to manipulate or generate audio, video, or images that convincingly imitate real people.

The word “deepfake” combines “deep learning,” a branch of AI built on many-layered neural networks trained on large datasets, and “fake,” which describes the synthetic output. But here’s the important nuance: not all deepfakes are malicious. Some are genuinely helpful.

Think of deepfake technology like a digital impersonator trained on thousands of examples. If you’ve ever watched a skilled impressionist mimic a celebrity’s voice or mannerisms, deepfake AI does the same thing — except it studies facial movements, vocal patterns, lighting, and micro-expressions at a scale humans can’t match.

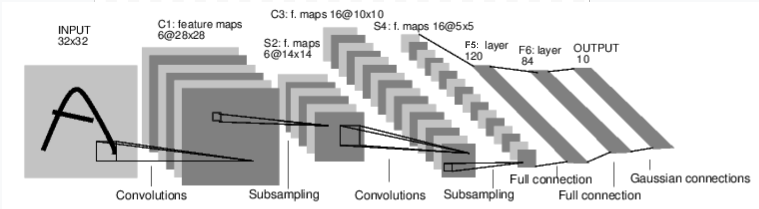

Most modern deepfakes rely on techniques such as:

- Generative Adversarial Networks (GANs)

- Autoencoders

- Diffusion models

- Voice cloning neural networks

These systems learn patterns from real media and then recreate them with stunning accuracy. The result can be a video of someone saying words they never spoke or a voice recording that sounds eerily authentic.

Where things get complicated is intent. Deepfake technology itself is neutral. The outcome depends entirely on how humans use it.

How deepfake technology works (without the technical headache)

To really understand deepfake technology, imagine teaching a child to mimic you perfectly.

First, the AI studies you. It looks at hundreds or thousands of photos, videos, or voice recordings. It analyzes how your face moves when you smile, how your eyes blink, how your voice rises at the end of a sentence, and even how you pause before speaking.

Next, it practices. The AI generates versions of “you” and compares them to real footage. In GAN-based systems, a second model acts as a critic, trying to tell the fakes from real footage and exposing where they fall short. This back-and-forth continues until the synthetic version becomes convincing.
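The critic in this back-and-forth is the discriminator of a generative adversarial network (GAN), one of the techniques listed earlier. As a minimal sketch, assuming the discriminator outputs a probability that its input is real, these are the two competing losses in plain Python. The function names are illustrative; real systems compute these losses over large batches inside a deep-learning framework.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the critic.

    d_real: critic scores (probability "real") for genuine samples.
    d_fake: critic scores for generated samples.
    The critic wants d_real close to 1 and d_fake close to 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the critic scores fakes as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training the critic easily spots fakes, so the generator's loss is high.
print(generator_loss([0.05, 0.10]))
# Later the critic is fooled about half the time, and the generator's loss drops.
print(generator_loss([0.5, 0.5]))
```

When the critic can no longer tell real from fake, it scores everything around 0.5; in the original GAN formulation, that is the point at which generated samples match the real data distribution.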

In video deepfakes, the process typically includes:

- Face detection and alignment

- Feature mapping (eyes, nose, mouth, jaw)

- Motion tracking

- Frame-by-frame synthesis

- Post-processing to smooth artifacts
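The alignment step can be illustrated with a classic normalization: rotate, scale, and shift a face’s landmark points so the eyes always land in the same place, whatever the head pose in the original frame. This is a minimal sketch that assumes eye centers and other landmarks are already available as (x, y) pixel coordinates from some face detector; production pipelines typically estimate a full similarity or affine transform from many landmarks.

```python
import math


def align_landmarks(left_eye, right_eye, points):
    """Map points into a frame where the eyes sit at (-0.5, 0) and (0.5, 0).

    left_eye, right_eye: (x, y) eye centers in image coordinates.
    points: list of (x, y) landmarks to transform the same way.
    """
    # The midpoint between the eyes becomes the new origin.
    cx = (left_eye[0] + right_eye[0]) / 2
    cy = (left_eye[1] + right_eye[1]) / 2
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    # Rotating by -angle makes the line between the eyes horizontal.
    angle = math.atan2(dy, dx)
    cos_a, sin_a = math.cos(-angle), math.sin(-angle)
    # Scaling by 1 / inter-eye distance puts the eyes exactly 1 unit apart.
    scale = 1.0 / math.hypot(dx, dy)

    aligned = []
    for x, y in points:
        tx, ty = x - cx, y - cy
        rx = tx * cos_a - ty * sin_a
        ry = tx * sin_a + ty * cos_a
        aligned.append((rx * scale, ry * scale))
    return aligned


# A tilted face: the right eye sits lower and to the right of the left eye.
print(align_landmarks((0, 0), (10, 10), [(0, 0), (10, 10), (5, 20)]))
```

Because every face ends up in the same normalized frame, the model sees consistent inputs whether the person tilted their head or stood far from the camera.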

For voice deepfakes, the AI:

- Learns vocal tone, pitch, cadence, and accent

- Maps phonemes to sound waves

- Reconstructs speech using text-to-speech models
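To make “pitch” concrete: voice-cloning models learn it implicitly, but a classic signal-processing way to measure a voice’s fundamental frequency is autocorrelation, which finds the time shift at which the waveform best lines up with itself. This minimal sketch uses a synthetic tone rather than real speech, and the 50 to 400 Hz search range is an assumption meant to roughly cover speaking voices. It illustrates what pitch is, not how any particular cloning tool works.

```python
import math


def estimate_pitch(samples, sample_rate, min_hz=50, max_hz=400):
    """Estimate fundamental frequency (Hz) by picking the autocorrelation peak."""
    min_lag = int(sample_rate / max_hz)  # shortest period considered
    max_lag = int(sample_rate / min_hz)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, min(max_lag, len(samples) - 1) + 1):
        # How well does the signal match a copy of itself shifted by `lag` samples?
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# A 200 Hz tone sampled at 8 kHz for 50 ms stands in for a voiced sound.
rate = 8000
tone = [math.sin(2 * math.pi * 200 * n / rate) for n in range(400)]
print(estimate_pitch(tone, rate))
```

Real pitch trackers normalize the score and add checks against picking a multiple of the true period, since raw speech is far messier than a pure tone.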

What’s remarkable is how accessible this has become. Five years ago, creating a convincing deepfake required serious computing power and expertise. Today, browser-based tools can do it in minutes.

That accessibility is both the magic and the danger.

The real-world benefits and legitimate use cases of deepfake technology

Deepfake technology isn’t just about trickery. When used ethically and transparently, it can unlock enormous value across industries.

In entertainment and media, filmmakers use deepfake-like techniques to de-age actors, dub films into multiple languages while preserving lip movements, or resurrect historical figures for documentaries. Studios have quietly adopted these tools to save time and production costs.

In education and training, deepfake technology allows the creation of lifelike instructors who can deliver lessons in multiple languages or personalize content for different learning styles. Medical schools use synthetic patients to train doctors in diagnosis and bedside communication.

Marketing and content creation have also embraced deepfake tools. Brands now create personalized video messages at scale, where a digital presenter addresses each viewer by name. This kind of personalization used to be impossible.

Accessibility is another major benefit. Voice cloning helps people who’ve lost their ability to speak regain a version of their original voice. Language translation deepfakes allow speakers to communicate globally while retaining their natural expression.

Even customer support is changing. AI-powered digital humans can answer questions 24/7 while maintaining consistent tone and appearance.

The key takeaway: deepfake technology is most valuable when it enhances human capability without undermining trust.

The darker side: risks, misuse, and ethical concerns

Now for the uncomfortable part.

Deepfake technology has been used for scams, political manipulation, harassment, and identity theft. Voice deepfakes have fooled employees into wiring money. Fake videos have spread misinformation faster than fact-checkers can respond.

One of the biggest dangers is erosion of trust. When anyone can fake anything, people begin doubting real evidence. This phenomenon, often called the “liar’s dividend,” allows wrongdoers to dismiss genuine recordings as fake.

Common malicious uses include:

- Financial fraud via voice impersonation

- Non-consensual explicit content

- Political misinformation

- Corporate espionage

- Social engineering attacks

The emotional toll is real. Victims of deepfake abuse often struggle to clear their names, even after content is debunked.

This is why ethical guidelines, watermarking, and detection tools matter just as much as creation tools.

A step-by-step guide to creating deepfake content responsibly

If you’re exploring deepfake technology for legitimate purposes, responsibility should be baked into your process from the start.

Step one is consent. Always obtain explicit permission from anyone whose likeness or voice you’re using. This isn’t just ethical — it’s increasingly required by law.

Next, choose the right tool based on your goal. Face swaps, voice cloning, and avatar generation each require different platforms.

Prepare high-quality source material. Clean audio and well-lit video dramatically improve results and reduce uncanny artifacts.

Generate your content in controlled environments. Start with short clips, review frame-by-frame, and test across devices.

Always disclose synthetic media. Transparency builds trust and protects your reputation.

Finally, secure your files. Deepfake assets should be treated like sensitive data to prevent misuse.

Responsible creation isn’t about limiting creativity. It’s about making sure innovation doesn’t come at the cost of integrity.

Deepfake tools: comparisons, pros, cons, and expert picks

The deepfake technology ecosystem is growing fast, and not all tools are created equal.

For video generation, platforms like Synthesia and HeyGen focus on ethical, consent-based avatar creation. They’re ideal for business, training, and marketing.

For voice cloning, tools such as ElevenLabs offer incredibly natural results but require strict safeguards.

Open-source tools provide flexibility but come with higher risk. Without built-in consent systems, responsibility falls entirely on the user.

Free tools are good for experimentation but often lack quality control and legal protections. Paid tools offer better outputs, support, and compliance features.

Detection tools are equally important. Companies like Truepic focus on verifying media authenticity and provenance.

My expert recommendation: if you’re using deepfake technology commercially, choose platforms that prioritize transparency, watermarking, and ethical use. Cutting corners here can cost you far more later.

Common deepfake mistakes — and how to fix them

One of the biggest mistakes people make is underestimating how observant humans are. Even small glitches — unnatural blinking, audio lag, stiff expressions — can shatter believability.

Another common error is poor source material. Grainy videos or noisy audio confuse AI models and produce uncanny results.

Legal oversight is another trap. Using someone’s likeness without proper agreements can lead to lawsuits, takedowns, and permanent reputational damage.

Many creators also forget disclosure. Failing to label synthetic content can destroy trust even if the use was harmless.

Fixes are straightforward:

- Invest in quality input data

- Use reputable platforms

- Test with real viewers

- Clearly label synthetic media

- Stay informed about regulations

Deepfake technology rewards patience and ethics far more than shortcuts.

How to detect deepfakes as a viewer or organization


Detection is becoming a critical skill.

As a viewer, watch for unnatural facial movements, inconsistent lighting, or mismatched audio. Trust your instincts when something feels “off.”
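Some of these cues can be checked automatically. Early deepfake-detection research, for example, observed that some generated faces blinked unusually rarely. Below is a minimal sketch of blink counting with the eye aspect ratio (EAR), a landmark-based measure of how open an eye is. It assumes six (x, y) landmarks per eye per frame from a separate face-landmark detector; the 0.2 threshold and two-frame minimum are illustrative assumptions.

```python
import math


def eye_aspect_ratio(eye):
    """eye: six (x, y) points with corners at indices 0 and 3,
    upper lid at 1 and 2, lower lid at 5 and 4."""
    vertical = math.dist(eye[1], eye[5]) + math.dist(eye[2], eye[4])
    horizontal = math.dist(eye[0], eye[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))
print(count_blinks([0.3, 0.1, 0.1, 0.3, 0.3, 0.12, 0.1, 0.3]))
```

Blink rate alone is a weak signal, since newer generators often reproduce natural blinking, so a practical detector would combine many cues.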

Organizations should invest in AI-based detection tools and employee training. Simple verification steps, like callback protocols for financial requests, can stop scams cold.
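A callback rule is simple enough to encode directly in a payments workflow. This is a minimal sketch built on a hypothetical `PaymentRequest` record and a directory of phone numbers already on file; the threshold is illustrative. The key design choice is that verification uses contact details the organization already holds, never ones supplied in the request itself, because a scammer controls those.

```python
from dataclasses import dataclass


@dataclass
class PaymentRequest:
    requester: str             # who the request claims to come from
    amount: float
    bank_details_changed: bool
    callback_number: str       # number supplied in the request (untrusted)


def needs_callback(request, threshold=10_000):
    """High-value requests or changed bank details always require a callback."""
    return request.amount >= threshold or request.bank_details_changed


def number_to_call(request, directory):
    """Return the number on file for the requester, ignoring the one in the request.

    Raises KeyError for an unknown requester, which should halt the payment.
    """
    return directory[request.requester]


directory = {"cfo@example.com": "+1-555-0100"}
req = PaymentRequest("cfo@example.com", 250_000, False, "+1-555-0199")
if needs_callback(req):
    print("Call", number_to_call(req, directory), "before paying")
```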

Media literacy is your strongest defense. The more people understand how deepfake technology works, the harder it becomes to weaponize it.

The future of deepfake technology: where we’re headed

Deepfake technology is evolving alongside regulation, ethics, and public awareness.

Expect stronger watermarking standards, cryptographic signatures, and platform-level detection. Governments are introducing laws that require disclosure and penalize malicious use.
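The idea behind cryptographic signing of media fits in a few lines: a signature computed over a file’s bytes stops verifying if even one byte changes. This minimal sketch uses a shared-secret HMAC from Python’s standard library for simplicity. Real provenance standards such as C2PA use public-key signatures and certificate chains instead, and the key below is a placeholder.

```python
import hashlib
import hmac

# Placeholder key for illustration; a real system would manage keys securely.
SECRET_KEY = b"placeholder-key-for-illustration"


def sign_media(data: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the media bytes."""
    return hmac.new(SECRET_KEY, data, hashlib.sha256).hexdigest()


def verify_media(data: bytes, tag: str) -> bool:
    """Compare the expected tag with the supplied one in constant time."""
    return hmac.compare_digest(sign_media(data), tag)


original = b"example video bytes"
tag = sign_media(original)
print(verify_media(original, tag))              # unchanged file verifies
print(verify_media(original + b"edited", tag))  # any edit breaks verification
```

Note what this does and does not prove: a valid signature shows the file is unchanged since it was signed, not that its content is true.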

At the same time, legitimate applications will expand. Digital humans will become more common in education, healthcare, and global communication.

The future isn’t about stopping deepfake technology. It’s about shaping it responsibly.

Conclusion: understanding deepfake technology is no longer optional

Deepfake technology sits at the intersection of creativity and risk. Ignoring it won’t make it go away. Understanding it gives you power — whether that’s the power to create responsibly, protect yourself, or educate others.

Used well, deepfake technology can humanize communication, expand access, and unlock new forms of storytelling. Used poorly, it can erode trust and cause real harm.

The difference isn’t the technology. It’s us.

If you take one thing away from this guide, let it be this: stay curious, stay ethical, and never stop questioning what you see and hear.

FAQs

What is deepfake technology used for?

Deepfake technology is used in entertainment, education, marketing, accessibility tools, and fraud prevention research.

Is deepfake technology legal?

The technology itself is generally legal, but misuse such as fraud, harassment, or non-consensual content is illegal in many regions.

How can I tell if a video is a deepfake?

Look for unnatural movements and audio mismatches, and verify the source through trusted channels.

Can deepfake technology be used safely?

Yes, when you use consent-based tools, clear disclosures, and strong security practices.

Are deepfakes getting harder to detect?

Yes, but detection tools and authentication standards are improving alongside generation quality.

Do you need technical skills to create deepfakes?

Not necessarily. Many modern tools are no-code and user-friendly.


Brain Chip Technology: How Human Minds Are Beginning to Interface With Machines

Brain chip technology is no longer a sci-fi trope whispered about in futuristic novels or late-night podcasts. It’s real, it’s advancing quickly, and it’s quietly reshaping how we think about health, ability, communication, and even what it means to be human. If you’ve ever wondered whether humans will truly connect their brains to computers—or whether that future is already here—you’re asking the right question at exactly the right time.

Put simply, brain chip technology refers to implantable or wearable systems that read, interpret, and sometimes stimulate neural activity to help the brain communicate directly with machines. This technology matters because it sits at the intersection of medicine, neuroscience, artificial intelligence, ethics, and human potential. In this deep-dive guide, you’ll learn how brain chips work, who they help today, where the technology is heading, the benefits and risks involved, and how to separate hype from reality.

Understanding Brain Chip Technology in Simple Terms

At its core, brain chip technology is about translating the language of the brain—electrical signals fired by neurons—into something computers can understand. Think of your brain as an incredibly fast, complex orchestra where billions of neurons communicate through electrical impulses. A brain chip acts like a highly sensitive microphone placed inside that orchestra, listening carefully and translating those signals into digital commands.

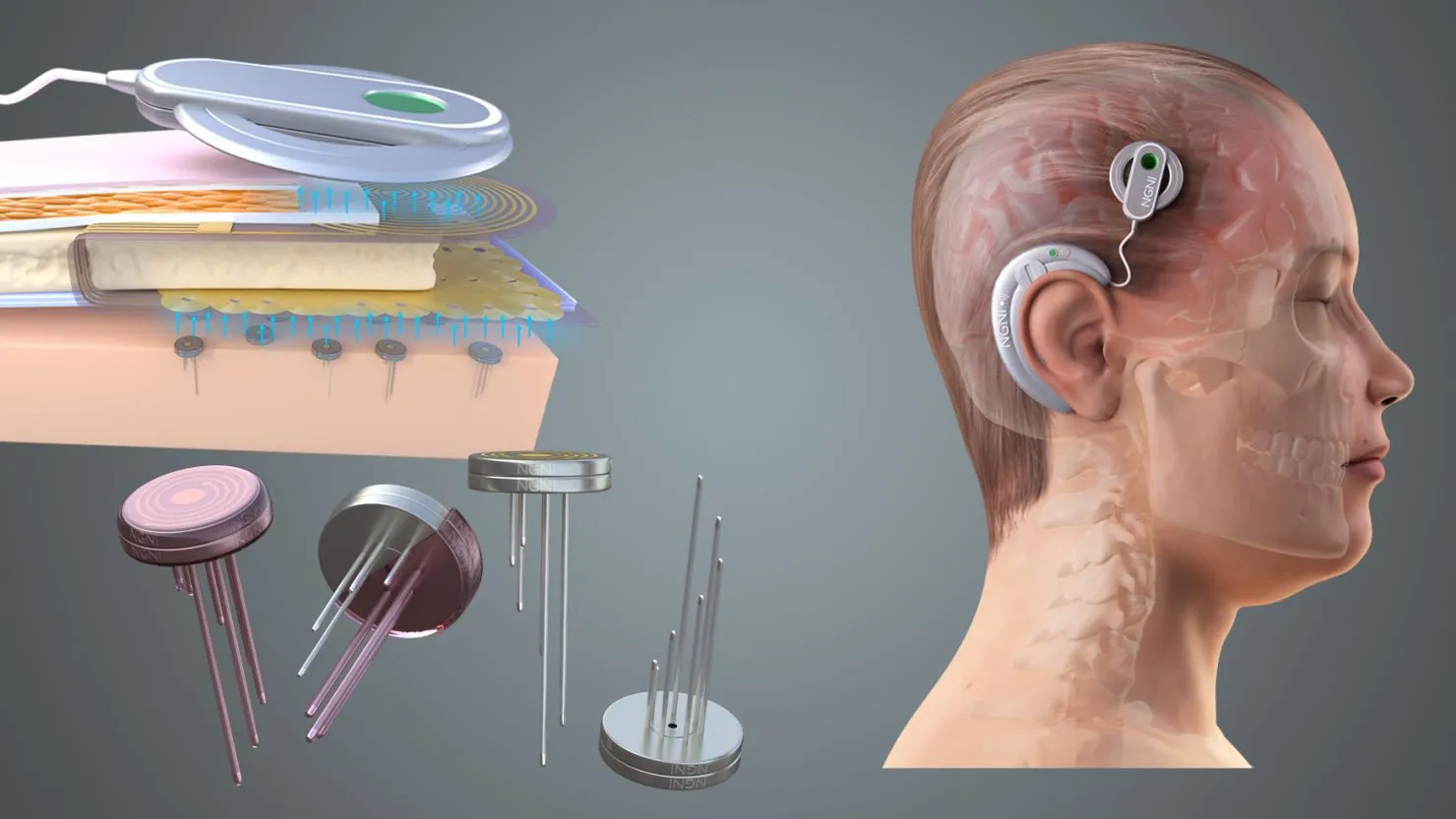

This field is more formally known as brain-computer interfaces (BCIs). Some BCIs are external, using EEG caps that sit on the scalp. Others are invasive, meaning tiny electrodes are surgically implanted into specific regions of the brain. The more closely you listen to neurons, the more accurate the signal becomes, which is why implantable brain chips are such a big focus right now.

To make this relatable, imagine typing without your hands. Instead of pressing keys, you think about moving your fingers, and the brain chip detects those intentions and converts them into text on a screen. That’s not hypothetical—it’s already happening in clinical trials. The magic lies not in reading thoughts like a mind reader, but in detecting patterns associated with intention, movement, or sensation.

The Science Behind Brain Chip Technology (Without the Jargon)

The human brain communicates using electrochemical signals. When neurons fire, they create tiny voltage changes. Brain chips use microelectrodes to detect these changes and feed the data into algorithms trained to recognize patterns.

Modern brain chip systems rely heavily on machine learning. Over time, the system “learns” what specific neural patterns mean for a particular person. This personalization is critical because no two brains are wired exactly alike.

There are three main technical components involved:

- Neural sensors that capture brain activity

- Signal processors that clean and decode the data

- Output systems that translate signals into actions like moving a cursor or activating a prosthetic
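The signal-processing stage often starts with something simple. A common approach with implanted microelectrodes is threshold-crossing detection: flag a “spike” whenever the voltage dips below a negative multiple of the channel’s noise level, then count spikes in short time bins to produce firing rates for the decoder. This minimal sketch assumes a single channel of already-filtered voltage samples; the -4.0 multiplier and the bin size are illustrative assumptions.

```python
import math


def detect_spikes(voltages, multiplier=-4.0):
    """Return sample indices where the signal first crosses multiplier * RMS."""
    # Real systems often estimate noise more robustly, since large spikes inflate RMS.
    rms = math.sqrt(sum(v * v for v in voltages) / len(voltages))
    threshold = multiplier * rms
    spikes = []
    below = False
    for i, v in enumerate(voltages):
        if v < threshold and not below:
            spikes.append(i)  # count only the first sample of each crossing
        below = v < threshold
    return spikes


def bin_counts(spike_indices, n_samples, bin_size):
    """Count spikes per fixed-size bin, giving a firing-rate-like feature vector."""
    counts = [0] * math.ceil(n_samples / bin_size)
    for i in spike_indices:
        counts[i // bin_size] += 1
    return counts


signal = [0.1, -0.1] * 50   # low-level background noise
signal[20] = signal[21] = -10.0  # one spike spanning two samples
signal[70] = -10.0               # a second spike
print(bin_counts(detect_spikes(signal), len(signal), 50))
```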

Some advanced brain chips also work in reverse. They don’t just read signals—they send electrical stimulation back into the brain. This is how treatments like deep brain stimulation already help patients with Parkinson’s disease. The next generation aims to restore sensation, reduce chronic pain, or even repair damaged neural circuits.

Why Brain Chip Technology Matters More Than Ever

The urgency around brain chip technology isn’t about novelty—it’s about unmet needs. Millions of people worldwide live with paralysis, neurodegenerative diseases, spinal cord injuries, or speech impairments. Traditional medicine can only go so far once neural pathways are damaged.

Brain chips offer a workaround. Instead of repairing broken biological connections, they bypass them. That’s a profound shift in how we approach disability and recovery.

Beyond healthcare, the technology raises serious questions about human augmentation, cognitive enhancement, privacy, and ethics. We’re standing at the same kind of crossroads humanity faced with the internet or smartphones—except this time, the interface is the human brain itself.

Real-World Benefits and Use Cases of Brain Chip Technology

Restoring Movement and Independence

One of the most powerful applications of brain chip technology is helping paralyzed individuals regain control over their environment. People with spinal cord injuries have used brain chips to control robotic arms, wheelchairs, and even their own paralyzed limbs through external stimulators.

The emotional impact of this cannot be overstated. Being able to pick up a cup, type a message, or scratch an itch after years of immobility is life-changing. These aren’t flashy demos; they’re deeply human victories.

Communication for Locked-In Patients

Patients with ALS or brainstem strokes sometimes retain full cognitive ability but lose the power to speak or move. Brain chips allow them to communicate by translating neural signals into text or speech.

In clinical trials conducted by organizations like BrainGate, participants have typed sentences using only their thoughts. For families, this restores not just communication, but dignity and connection.

Treating Neurological Disorders

Brain chips are also being explored for treating epilepsy, depression, OCD, and chronic pain. By monitoring abnormal neural patterns and intervening in real time, these systems could offer more precise treatments than medication alone.

Unlike drugs, which circulate throughout the brain and body, targeted neural stimulation acts on the specific circuits involved, which may reduce side effects and improve outcomes.
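“Monitoring abnormal neural patterns and intervening in real time” describes a closed-loop design: measure activity continuously, and stimulate only when a pattern crosses a limit. Below is a minimal sketch of that control logic. It assumes a stream of short signal windows and a hypothetical `stimulate()` callback; the power threshold and refractory period are placeholders that would, in practice, be tuned for each patient.

```python
def window_power(window):
    """Mean squared amplitude of one window of samples."""
    return sum(x * x for x in window) / len(window)


def closed_loop(windows, threshold, stimulate, refractory_windows=3):
    """Call stimulate(i) when the power in window i exceeds threshold.

    After each stimulation, skip the next `refractory_windows` windows so the
    device does not fire continuously. Returns the indices that triggered.
    """
    triggered = []
    cooldown = 0
    for i, window in enumerate(windows):
        if cooldown > 0:
            cooldown -= 1
            continue
        if window_power(window) > threshold:
            stimulate(i)
            triggered.append(i)
            cooldown = refractory_windows
    return triggered


quiet, loud = [0.1] * 10, [2.0] * 10
stream = [quiet, loud, loud, loud, loud, loud, quiet]
print(closed_loop(stream, threshold=1.0, stimulate=lambda i: print("stimulate at", i)))
```

A clinical responsive neurostimulator adds far more on top of this, including validated detection algorithms and hard safety limits on stimulation.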

Future Cognitive Enhancement (With Caveats)

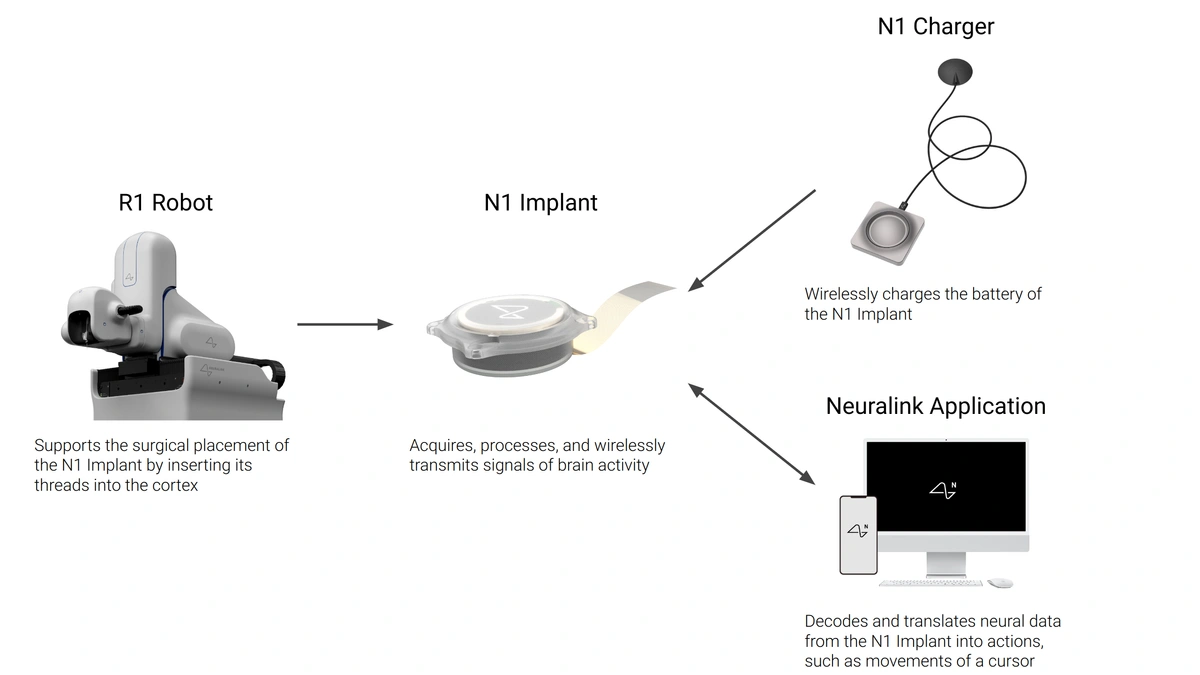

While medical use comes first, many people are curious about enhancement: faster learning, improved memory, or direct brain-to-AI interaction. Companies like Neuralink, co-founded by Elon Musk, openly discuss long-term goals involving human-AI symbiosis.

It’s important to note that enhancement applications remain speculative and ethically complex. Today’s reality is firmly medical and therapeutic.

A Practical, Step-by-Step Look at How Brain Chip Technology Is Used

Understanding the process helps demystify the technology and separate reality from hype.

The journey typically begins with patient evaluation. Neurologists determine whether a person’s condition could benefit from a brain-computer interface. Not everyone is a candidate, and risks are carefully weighed.

Next comes surgical implantation for invasive systems. Guided by brain imaging, and in some systems assisted by a surgical robot, surgeons place microelectrodes with extreme precision. The procedure is delicate but increasingly refined.

After implantation, calibration begins. This is where machine learning plays a central role. The system observes neural activity while the user attempts specific actions, gradually learning how to interpret their signals.
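Calibration can be pictured with a deliberately simple decoder. While the user attempts known actions, the system records a feature vector (for example, spike counts per electrode) for each attempt; afterward, it labels new activity by whichever action’s average pattern it most resembles. This is a minimal nearest-centroid sketch with made-up numbers. Actual BCI decoders typically use more sophisticated methods such as Kalman filters or neural networks.

```python
import math


def calibrate(trials):
    """trials: dict mapping an intended action to a list of feature vectors.

    Returns the average (centroid) feature vector for each action.
    """
    centroids = {}
    for action, vectors in trials.items():
        n = len(vectors)
        centroids[action] = [sum(col) / n for col in zip(*vectors)]
    return centroids


def decode(features, centroids):
    """Return the action whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda action: math.dist(features, centroids[action]))


# Hypothetical spike counts on three electrodes recorded during calibration.
trials = {
    "left": [[9, 2, 1], [8, 3, 2], [10, 2, 1]],
    "right": [[1, 3, 9], [2, 2, 8], [1, 4, 10]],
    "rest": [[2, 2, 2], [3, 1, 2], [2, 2, 3]],
}
centroids = calibrate(trials)
print(decode([7, 3, 2], centroids))
```

Recalibrating simply means collecting fresh trials and recomputing the centroids, which hints at why personalization and periodic updates matter.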

Training follows. Users practice controlling devices through thought alone. This stage requires patience, but many users improve steadily as brain and machine adapt to each other.

Ongoing monitoring and updates help maintain safety and performance. In regulated settings, oversight from agencies like the FDA, together with institutional ethics review, is meant to ensure medical and ethical compliance.

Tools, Platforms, and Leading Brain Chip Developers

Several organizations are shaping the present and future of brain chip technology.

Synchron focuses on minimally invasive implants delivered through blood vessels, avoiding open-brain surgery. Their approach reduces risk and speeds recovery.

Blackrock Neurotech provides research-grade neural implants used in many clinical trials worldwide. Their Utah Array has become a standard in neuroscience research.

Academic-industry partnerships continue to drive innovation, blending rigorous science with real-world application. While consumer brain implants don’t exist yet, medical systems are advancing steadily.

The free-versus-paid split used for software doesn’t really apply here; what matters is the distinction between research access and commercial availability. Most current brain chip systems are accessible only through clinical trials.

Common Mistakes and Misconceptions About Brain Chip Technology

One of the biggest mistakes people make is assuming brain chips can read private thoughts. They cannot. These systems decode specific neural patterns related to trained tasks, not abstract ideas or secrets.

Another misconception is that brain chip users lose autonomy or become controlled by machines. In reality, decoding systems turn the user’s intended actions into commands, and stimulation devices operate within limits set by clinicians. The user remains fully conscious and in charge.

Many also overestimate the speed of development. While progress is impressive, widespread consumer brain chips are not imminent. Regulatory, ethical, and safety hurdles are substantial and necessary.

Finally, ignoring ethical considerations is a serious error. Data privacy, consent, and long-term effects must be addressed responsibly as the technology evolves.

Ethical, Legal, and Social Implications You Should Understand

Brain chip technology forces society to confront new ethical territory. Who owns neural data? How do we protect users from misuse? Could access become unequal?

Regulation is still catching up. Governments, ethicists, and technologists must collaborate to ensure safeguards without stifling innovation. Transparency and public dialogue are essential.

The most responsible developers emphasize medical need first, enhancement later—if ever. This prioritization builds trust and aligns innovation with genuine human benefit.

Where Brain Chip Technology Is Headed Next

The next decade will likely bring smaller implants, wireless power, longer lifespans, and improved signal resolution. Non-invasive and minimally invasive approaches will expand accessibility.

Integration with AI will improve decoding accuracy and personalization. However, meaningful breakthroughs will come from clinical success stories, not flashy announcements.

Brain chip technology won’t replace smartphones overnight, but it may quietly redefine assistive medicine and rehabilitation in ways we’re only beginning to understand.

Conclusion: What Brain Chip Technology Really Means for Humanity

Brain chip technology isn’t about turning people into cyborgs. It’s about restoring lost abilities, unlocking communication, and giving people back parts of their lives once thought gone forever. The true power of this technology lies not in futurism, but in compassion.

As research continues and safeguards mature, brain chips may become one of the most humane uses of advanced technology we’ve ever developed. If you’re curious, cautious, or cautiously optimistic—you’re in good company. The future of brain chip technology will be shaped not just by engineers, but by informed, engaged humans like you.

FAQs

Are brain chips safe?

Current systems are tested under strict clinical protocols. While surgery carries risk, safety has improved significantly.

Can brain chips read your thoughts?

No. They detect trained neural patterns related to specific tasks, not abstract or private thoughts.

Who can benefit from brain chips?

People with paralysis, ALS, spinal cord injuries, and certain neurological disorders benefit most today.

Are brain chip implants permanent?

Some implants are designed for long-term use, while others can be removed or upgraded.

Can anyone get a brain chip today?

Not yet. Most access is through clinical trials or specialized medical programs.


Face Recognition Technology: How It Works, Where It’s Used, and What the Future Holds

Have you ever unlocked your phone without typing a password, walked through an airport without showing your boarding pass, or noticed a camera that seems a little too smart? Behind those everyday moments is face recognition technology, quietly reshaping how we interact with devices, services, and even public spaces.

I still remember the first time I saw face recognition used outside a smartphone. It was at a corporate office where employees simply walked in—no badges, no sign-in desk. The system recognized faces in real time, logging attendance automatically. It felt futuristic… and slightly unsettling. That mix of convenience and concern is exactly why face recognition technology matters today.

This technology is no longer limited to sci-fi movies or government labs. It’s now embedded in smartphones, banking apps, airports, retail stores, healthcare systems, and even classrooms. Businesses love it for security and efficiency. Consumers love it for speed and ease. Regulators, meanwhile, are racing to keep up.

In this guide, I’ll walk you through what face recognition technology really is, how it works under the hood, where it’s being used successfully, and what mistakes to avoid if you’re considering it. I’ll also share practical tools, real-world use cases, ethical considerations, and where this rapidly evolving technology is headed next. Whether you’re a tech enthusiast, business owner, or simply curious, you’ll leave with a clear, balanced understanding.

What Is Face Recognition Technology? (Explained Simply)

At its core, face recognition technology is a biometric system that identifies or verifies a person based on their facial features. Think of it like a digital version of how humans recognize each other—but powered by algorithms instead of memory.

Here’s a simple analogy:

Imagine your brain keeping a mental “map” of someone’s face—eye distance, nose shape, jawline, and overall proportions. Face recognition software does something similar, but mathematically. It converts facial characteristics into data points, creating a unique facial signature often called a faceprint.

The process usually involves three stages:

- Face Detection – The system detects a human face in an image or video.

- Feature Analysis – Key facial landmarks (eyes, nose, mouth, cheekbones) are measured.

- Matching – The extracted data is compared against stored face templates in a database.

What makes modern face recognition powerful is artificial intelligence and deep learning. Older systems struggled with lighting, angles, or facial expressions. Today’s deep-learning models, trained on large and varied datasets, are far more robust and can often recognize faces despite glasses, facial hair, aging, or partial coverings such as masks, though accuracy still drops in difficult conditions.

It’s also important to distinguish between face detection and face recognition. Detection simply finds a face in an image. Recognition goes a step further—it identifies who that face belongs to. Many apps use detection only, while security systems rely on full recognition.

Why Face Recognition Technology Matters Today

Face recognition technology sits at the intersection of security, convenience, and identity. In a world where digital fraud, identity theft, and data breaches are rising, traditional passwords are no longer enough. Faces, unlike passwords, can’t be forgotten or easily stolen—at least in theory.

From a business perspective, the value is clear:

- Faster authentication than PINs or cards

- Reduced fraud in banking and payments

- Improved customer experience through personalization

- Automation of manual identity checks

From a societal perspective, the stakes are higher. Face recognition can enhance public safety, but it also raises serious questions about privacy, consent, and surveillance. This dual nature is why governments, tech companies, and civil rights groups are all paying close attention.

The technology isn’t inherently good or bad—it’s how it’s used that matters. Understanding its mechanics and implications is the first step to using it responsibly.

Benefits and Real-World Use Cases of Face Recognition Technology

1. Enhanced Security and Fraud Prevention

One of the strongest advantages of face recognition technology is security. Banks use it to verify users during mobile transactions. Offices use it to control access to restricted areas. Even smartphones rely on facial biometrics to protect sensitive data.

Unlike passwords or access cards, faces can’t be shared or forgotten, but they can be imitated with photos, videos, or masks. Liveness detection (checking that the face is real and physically present, not a photo or a screen) makes systems significantly harder to fool.

2. Seamless User Experience

Convenience is where face recognition truly shines. No typing. No remembering. No physical contact. Just look at the camera.

Examples include:

- Unlocking phones and laptops

- Contactless payments

- Hotel check-ins without front desks

- Smart homes that recognize residents

For users, this feels almost magical. For businesses, it reduces friction and boosts satisfaction.

3. Attendance, Access, and Workforce Management

Companies and schools use face recognition systems to track attendance automatically. No buddy punching. No fake sign-ins. The system logs time accurately, saving administrative hours and improving accountability.

4. Healthcare and Patient Safety

Hospitals use face recognition to identify patients, access medical records quickly, and prevent identity mix-ups. In emergencies, this can save lives by ensuring the right treatment reaches the right person.

5. Law Enforcement and Public Safety

When used responsibly and legally, face recognition helps identify missing persons, suspects, and victims. However, this use case is also the most controversial due to privacy concerns—highlighting the need for strict oversight.

How Face Recognition Technology Works: Step-by-Step

Let’s break down the technical workflow in plain language.

Step 1: Image or Video Capture

The system captures an image via a camera—this could be a smartphone camera, CCTV feed, or laptop webcam. Quality matters here. Better lighting and resolution improve accuracy.

Step 2: Face Detection

Using computer vision, the system locates a face within the image. It draws a digital boundary around it, ignoring backgrounds or other objects.

Step 3: Facial Feature Extraction

This is the heart of face recognition technology. Classic systems measure the geometry of facial landmarks, such as:

- Eye spacing

- Nose width

- Jawline shape

- Lip contours

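These measurements form a numerical template that is distinctive to each individual. Modern deep-learning systems go further, learning their own features instead of relying on hand-picked measurements.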
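As a rough sketch of the classic geometric approach, landmark measurements can be turned into a template by dividing each distance by the distance between the eyes, so the numbers stay the same whether the face is near or far from the camera. This assumes landmark coordinates are already available; the landmark names are hypothetical, and modern systems mostly replace hand-picked measurements with learned embeddings of 128 or more numbers.

```python
import math


def geometric_template(landmarks):
    """Build a scale-invariant template from named (x, y) landmarks.

    Every distance is divided by the inter-eye distance, so the same face
    photographed closer or farther away yields (nearly) the same numbers.
    """
    eye_dist = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    pairs = [
        ("left_eye", "nose_tip"),
        ("right_eye", "nose_tip"),
        ("nose_tip", "mouth_center"),
        ("mouth_center", "chin"),
        ("left_jaw", "right_jaw"),
    ]
    return [math.dist(landmarks[a], landmarks[b]) / eye_dist for a, b in pairs]


face = {
    "left_eye": (30, 40), "right_eye": (70, 40), "nose_tip": (50, 60),
    "mouth_center": (50, 80), "chin": (50, 100),
    "left_jaw": (20, 80), "right_jaw": (80, 80),
}
# The same face at twice the size should produce the same template.
bigger = {name: (2 * x, 2 * y) for name, (x, y) in face.items()}
print(geometric_template(face))
print(geometric_template(bigger))
```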

Step 4: Data Encoding

The facial template is converted into a mathematical representation. This data—not the raw image—is usually stored to protect privacy.

Step 5: Matching and Decision

The system compares the new face template against stored templates. If the similarity score crosses a predefined threshold, access is granted or identity confirmed.
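The matching step usually compares templates with a similarity measure such as cosine similarity. This minimal sketch assumes templates are plain lists of numbers (real embeddings come from a trained model) and uses an illustrative threshold. It shows both verification (1:1, “is this the claimed person?”) and identification (1:N, “who is this, if anyone?”).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two templates: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def verify(probe, enrolled, threshold=0.8):
    """1:1 check: does the probe match one enrolled template?"""
    return cosine_similarity(probe, enrolled) >= threshold


def identify(probe, gallery, threshold=0.8):
    """1:N search: return the best-matching name, or None if nobody clears the threshold."""
    best_name, best_score = None, threshold
    for name, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


gallery = {"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.1]}
print(identify([0.9, 0.1, 0.2], gallery))
print(identify([0.0, 0.0, 1.0], gallery))
```

Raising the threshold trades fewer false matches for more false rejections, which is why choosing it is a policy decision as much as a technical one.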

Best Practices for Accuracy

- Use high-quality cameras

- Enable liveness detection

- Regularly update facial data

- Combine with other authentication methods for sensitive systems

Tools, Software, and Face Recognition Platforms Compared

When choosing face recognition technology, tools fall into two broad categories: free or open-source options and paid, enterprise-grade solutions.

Free and Open-Source Options

Pros:

- Cost-effective

- Customizable

- Good for experimentation

Cons:

- Require technical expertise

- Limited support

- Not always production-ready

Best for developers and researchers testing concepts.

Paid and Enterprise Solutions

Pros:

- High accuracy

- Scalability

- Built-in compliance features

- Customer support

Cons:

- Subscription costs

- Vendor lock-in

Ideal for businesses needing reliability and legal compliance.

What to Look for When Choosing a Tool

- Accuracy across demographics

- Data security and encryption

- Compliance with privacy laws

- Integration with existing systems

There’s no “one-size-fits-all” solution. The best tool depends on your use case, budget, and ethical stance.

Common Mistakes with Face Recognition Technology (and How to Fix Them)

Even powerful technology fails when misused. Here are mistakes I’ve seen repeatedly—and how to avoid them.

Mistake 1: Ignoring Bias and Accuracy Issues

Face recognition systems, especially early ones, have shown higher error rates for certain demographic groups. This can lead to false matches and discrimination.

Fix:

Choose models trained on diverse datasets and test performance regularly.
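“Test performance regularly” can be made concrete by computing error rates separately for each demographic group instead of relying on one overall accuracy number. This minimal sketch assumes a hypothetical list of labeled comparison results, each recording the group, whether the pair was truly the same person, and whether the system declared a match.

```python
from collections import defaultdict


def error_rates_by_group(results):
    """results: iterable of (group, same_person, predicted_match) tuples.

    Returns {group: (false_match_rate, false_non_match_rate)}.
    A false match accepts two different people; a false non-match rejects
    a genuine pair. A rate is None when a group has no pairs of that kind.
    """
    counts = defaultdict(lambda: {"impostor": 0, "fm": 0, "genuine": 0, "fnm": 0})
    for group, same_person, predicted_match in results:
        c = counts[group]
        if same_person:
            c["genuine"] += 1
            c["fnm"] += int(not predicted_match)
        else:
            c["impostor"] += 1
            c["fm"] += int(predicted_match)
    return {
        group: (
            c["fm"] / c["impostor"] if c["impostor"] else None,
            c["fnm"] / c["genuine"] if c["genuine"] else None,
        )
        for group, c in counts.items()
    }


sample = [
    ("A", False, False), ("A", False, True), ("A", True, True), ("A", True, True),
    ("B", False, False), ("B", False, False), ("B", True, False), ("B", True, True),
]
print(error_rates_by_group(sample))
```

Large gaps between groups are the signal to investigate, retrain, or reconsider deployment.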

Mistake 2: Poor Consent and Transparency

Deploying face recognition without informing users damages trust and may violate laws.

Fix:

Always provide clear notices, obtain consent, and explain data usage.

Mistake 3: Overreliance on Face Recognition Alone

No system is 100% accurate.

Fix:

Use multi-factor authentication for high-risk scenarios.

Mistake 4: Weak Data Protection

Storing facial data insecurely is a major risk.

Fix:

Encrypt data, limit retention, and restrict access.

Ethical and Privacy Considerations You Can’t Ignore

Face recognition technology deals with one of the most personal identifiers we have—our face. Misuse can lead to mass surveillance, loss of anonymity, and abuse of power.

Key ethical principles include:

- Consent – People should know and agree.

- Purpose Limitation – Use data only for stated goals.

- Accountability – Systems must be auditable.

- Fairness – Avoid bias and discrimination.

Governments worldwide are debating regulations, and businesses that ignore ethics risk backlash, fines, and reputational damage.

The Future of Face Recognition Technology

The next generation of face recognition technology will be smarter, more private, and more regulated.

Trends to watch:

- On-device processing (no cloud storage)

- Improved accuracy with masks and aging

- Stronger privacy-preserving techniques

- Integration with augmented reality and smart cities

The future isn’t about more surveillance—it’s about smarter, safer identity verification.

Conclusion

Face recognition technology is no longer a novelty—it’s a foundational tool shaping security, convenience, and digital identity. When implemented responsibly, it saves time, reduces fraud, and improves experiences. When misused, it threatens privacy and trust.

The key takeaway? Use face recognition with intention. Understand how it works, choose the right tools, respect ethics, and stay informed as regulations evolve.

If you’re exploring this technology for business or personal use, start small, test carefully, and always put people first. Technology should serve humans—not the other way around.

FAQs

Yes, when implemented with strong security, encryption, and consent-based policies.

Top systems exceed 99% accuracy in controlled conditions, though real-world performance varies.

Modern AI models can, though accuracy may slightly decrease.

It depends on your country and use case. Always check local laws.

Like any data, yes—if poorly protected. Strong security minimizes risk.


Machine Learning: The Complete Practical Guide for Beginners, Professionals, and Curious Minds

Machine learning is everywhere — quietly shaping the content you see, the products you buy, the routes you drive, and even the emails you open. Yet for something so deeply embedded in modern life, machine learning often feels mysterious, overhyped, or intimidating.

I’ve spent years writing, researching, and working around technology-driven topics, and one pattern keeps repeating: people use machine learning every day but rarely understand it. This guide exists to change that.

Whether you’re a beginner trying to grasp the basics, a business owner exploring smarter automation, or a professional deciding whether machine learning is worth learning seriously, this article will walk you through everything — clearly, honestly, and without buzzwords.

By the end, you’ll understand what machine learning really is, how it works step by step, where it delivers real value, which tools actually matter, and how to avoid the most common mistakes that derail beginners.

Machine Learning Explained in Plain English

At its core, machine learning is about teaching computers to learn from experience instead of following rigid instructions.

Traditionally, software worked like this:

“If X happens, do Y.”

Every scenario had to be anticipated and hard-coded by a human.

Machine learning flips that model.

Instead of explicitly programming every rule, we give machines data, let them identify patterns, and allow them to improve their decisions over time. Think of it less like writing instructions and more like training a new employee. You don’t explain every possible situation — you give examples, feedback, and time.

A relatable analogy is email spam filtering. No engineer manually writes rules for every spam message. Instead, the system learns from millions of examples labeled “spam” or “not spam.” Over time, it becomes surprisingly good at recognizing patterns humans never explicitly defined.
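The spam-filter idea can be shown with a tiny word-counting classifier. This is a minimal multinomial naive Bayes sketch with add-one (Laplace) smoothing, one classic technique for spam filtering; it is not a description of how any particular email provider works, and the six training messages are invented for illustration.

```python
import math
from collections import Counter

def tokenize(text):
    return text.lower().split()

def train(examples):
    """Learn word counts per label from (text, label) pairs. No rules are written by hand."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab

def predict(model, text):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior: how common this label was in the training data.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Add-one smoothing so an unseen word doesn't zero out the whole probability.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("cheap pills free shipping", "spam"),
    ("meeting moved to monday", "ham"),
    ("can you review my report", "ham"),
    ("lunch on friday with the team", "ham"),
]

model = train(training_data)
print(predict(model, "free prize money"))
print(predict(model, "report for the monday meeting"))
```

Notice that nothing in the code says "free means spam"; that association comes entirely from the labeled examples, which is the shift from hand-written rules the paragraph describes.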

This ability to learn from data is what separates machine learning from traditional programming and makes it so powerful in messy, real-world situations.

Why Machine Learning Matters More Than Ever

Machine learning isn’t a trend — it’s a response to scale. Humans simply cannot manually analyze the massive volumes of data generated today. Every click, purchase, search, sensor, and swipe produces information.

Machine learning thrives in environments where:

- Data is large and complex

- Patterns are subtle or non-obvious

- Decisions need to improve continuously

That’s why companies using machine learning don’t just work faster — they often work smarter. They predict demand more accurately, detect fraud earlier, personalize experiences better, and automate decisions that once required entire teams.

What’s changed in recent years isn’t the idea itself — it’s accessibility. Open-source tools, cloud platforms, and online education have lowered the barrier so dramatically that individuals can now build models that once required corporate research labs.

Types of Machine Learning You Should Actually Understand

Not all machine learning works the same way. Understanding the main categories helps you choose the right approach and avoid confusion.

Supervised Learning

This is the most common type. The model learns from labeled data — meaning each input already has a known correct output.

Examples include:

- Predicting house prices using past sales data

- Email spam detection

- Credit risk assessment

You’re essentially showing the model examples and saying, “Here’s the right answer — learn how to get there.”
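To see supervised learning in miniature, the sketch below fits a straight line by ordinary least squares from house size to price, then predicts the price of a house it has not seen. The sizes and prices are invented, and a single-feature linear model is a deliberate simplification; real price models use many more features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical past sales (the labeled data): size in square meters -> price in thousands.
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 260, 310, 360]

slope, intercept = fit_line(sizes, prices)
print(f"price = {slope:.2f} * size + {intercept:.2f}")
print(f"predicted price for a 100 sq m house: {slope * 100 + intercept:.1f}k")
```

The known prices play the role of the "right answers"; the fitted slope and intercept are what the model learned from them.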

Unsupervised Learning

Here, the data has no labels. The model explores patterns on its own.

Common use cases include:

- Customer segmentation

- Market basket analysis

- Anomaly detection

Unsupervised learning is useful when you don’t yet know what you’re looking for — only that structure exists somewhere in the data.
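Customer segmentation is often done with clustering. Here is a minimal k-means sketch on two made-up features per customer (visits per month and average spend). The algorithm never sees a label; it only groups nearby points. The choice of two segments, the starting centers (simply the first and fourth customers), and the data are illustrative assumptions.

```python
import math

def assign(points, centers):
    """Put each point in the cluster of its nearest center (Euclidean distance)."""
    clusters = [[] for _ in centers]
    for p in points:
        nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
        clusters[nearest].append(p)
    return clusters

def kmeans(points, centers, max_iterations=20):
    """Alternate assignment and center updates until the centers stop moving."""
    centers = [tuple(c) for c in centers]
    for _ in range(max_iterations):
        clusters = assign(points, centers)
        # Move each center to the mean of its points; an empty cluster keeps its old center.
        new_centers = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    return centers, assign(points, centers)

# Hypothetical customers: (visits per month, average spend).
customers = [(2, 20), (3, 25), (2, 22), (12, 90), (14, 110), (13, 95)]

centers, segments = kmeans(customers, centers=[customers[0], customers[3]])
for center, members in zip(centers, segments):
    print(f"center=({center[0]:.1f}, {center[1]:.1f}) members={members}")
```

Because spend is on a much larger scale than visits here, it dominates the distance; in practice you would usually normalize features before clustering.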

Reinforcement Learning

This approach learns through trial and error. The model takes actions, receives feedback (rewards or penalties), and adjusts accordingly.

You’ll see this in:

- Game-playing AI

- Robotics

- Autonomous systems

It’s powerful but complex, and not usually where beginners should start.
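Full reinforcement learning is complex, but its core loop (act, receive a reward, adjust) can be shown with an epsilon-greedy multi-armed bandit, a simplified setting with no changing state. The three actions and their hidden payout probabilities are invented; the agent only ever sees the rewards. The 10% exploration rate and the fixed random seed are assumptions for the sketch.

```python
import random

def run_bandit(true_payout_probs, steps=5000, epsilon=0.1, seed=42):
    """Epsilon-greedy agent: usually exploit the best-known action, sometimes explore."""
    rng = random.Random(seed)
    n_actions = len(true_payout_probs)
    counts = [0] * n_actions
    value_estimates = [0.0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: value_estimates[a])  # exploit
        # The environment pays out 1 with a probability the agent cannot see.
        reward = 1.0 if rng.random() < true_payout_probs[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        value_estimates[action] += (reward - value_estimates[action]) / counts[action]
    return value_estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(v, 2) for v in estimates])
print("times each action was chosen:", counts)
```

Through trial and error alone, the agent ends up choosing the highest-paying action most of the time; the occasional random exploration is what lets it discover that action in the first place.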

Real-World Benefits and Use Cases of Machine Learning

Machine learning delivers value when it solves real problems — not when it’s used just because it sounds impressive.

In business, machine learning improves decision-making by identifying trends humans might miss. Retailers forecast demand more accurately, reducing overstock and shortages. Banks detect fraud in real time instead of after damage is done.

In healthcare, models assist doctors by analyzing medical images, predicting disease risks, and optimizing treatment plans. These systems don’t replace professionals — they amplify their expertise.

Content platforms use machine learning to recommend videos, articles, and products that align with user preferences. This isn’t magic — it’s pattern recognition at scale.

For individuals, machine learning powers:

- Voice assistants

- Language translation

- Photo organization

- Smart search results

The biggest benefit isn’t automation alone; it’s continuous improvement. As a system sees more relevant, good-quality data, it generally gets better.

A Practical Step-by-Step Guide to Machine Learning

If you’re serious about learning machine learning, this is the roadmap that actually works.

Step 1: Understand the Problem

Before touching code, define the question clearly. Are you predicting a number, classifying categories, or discovering patterns? Most failures begin with vague goals.

Step 2: Collect and Prepare Data

Data quality matters more than algorithms. Clean messy entries, handle missing values, remove duplicates, and normalize formats. This step often takes more time than model training — and that’s normal.
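Here is what that cleanup can look like on a handful of raw records. The sketch uses only the standard library and assumes a made-up record format (name, city, and age stored as strings). Filling missing ages with the median is just one common choice among several.

```python
from statistics import median

raw_records = [
    {"name": "Ana", "city": " lisbon ", "age": "34"},
    {"name": "Ben", "city": "Porto", "age": ""},
    {"name": "Ana", "city": "Lisbon", "age": "34"},  # duplicate once formats are normalized
    {"name": "Caro", "city": "PORTO", "age": "29"},
]

def normalize(record):
    """Trim whitespace, standardize capitalization, and convert age to int (None if missing)."""
    age = record["age"].strip()
    return {
        "name": record["name"].strip(),
        "city": record["city"].strip().title(),
        "age": int(age) if age else None,
    }

def clean(records):
    normalized = [normalize(r) for r in records]
    # Remove exact duplicates while keeping the original order.
    seen, unique = set(), []
    for r in normalized:
        key = (r["name"], r["city"], r["age"])
        if key not in seen:
            seen.add(key)
            unique.append(r)
    # Fill missing ages with the median of the known ages.
    fill_value = median(r["age"] for r in unique if r["age"] is not None)
    for r in unique:
        if r["age"] is None:
            r["age"] = fill_value
    return unique

for row in clean(raw_records):
    print(row)
```

The order matters: duplicates are removed after normalizing, because " lisbon " and "Lisbon" only match once the formats agree.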

Step 3: Choose the Right Model

Simple models often outperform complex ones when data is limited. Start with basics before chasing deep learning.

Step 4: Train and Evaluate

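Split data into training and testing sets so the model is judged on examples it has never seen. Measure performance with metrics suited to the problem, such as precision and recall for imbalanced classification or mean absolute error for regression, rather than raw accuracy alone.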
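The limits of raw accuracy are easiest to see on imbalanced data. The sketch below uses a hypothetical test set in which only 2 of 10 cases are positive (say, fraud) and a lazy model that always predicts "not fraud". The split helper shows the usual shuffle-then-cut approach; the 80/20 ratio and the seed are assumptions.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data, then hold out the last test_fraction as unseen test data."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - round(len(shuffled) * test_fraction)
    return shuffled[:cut], shuffled[cut:]

def metrics(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of flagged cases, how many were real
    recall = tp / (tp + fn) if tp + fn else 0.0     # of real cases, how many were flagged
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

train_rows, test_rows = train_test_split(list(range(100)))
print(len(train_rows), len(test_rows))  # 80 20

# Hypothetical test labels: 1 = fraud, 0 = legitimate.
y_true = [0, 0, 0, 1, 0, 0, 0, 0, 1, 0]
always_legit = [0] * len(y_true)
print(metrics(y_true, always_legit))
```

The lazy model scores 80% accuracy while catching zero fraud cases; recall makes that failure obvious.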

Step 5: Improve Iteratively

Refine features, tune parameters, and test variations. Machine learning is iterative, not linear.

Step 6: Deploy and Monitor

A model isn’t “done” when it works once. Real-world data changes. Monitor performance and retrain when accuracy drops.
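Monitoring can start as simply as tracking accuracy over a rolling window of recent predictions whose true outcomes have become known, and flagging the model for retraining when that accuracy falls below a floor. The window size of 5 and the 0.8 floor are arbitrary assumptions for this sketch; production systems typically watch for shifts in the input data as well, not just accuracy.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent `window` predictions with known outcomes."""

    def __init__(self, window=5, floor=0.8):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.floor = floor

    def record(self, prediction, actual):
        self.results.append(prediction == actual)

    def accuracy(self):
        # With no outcomes recorded yet there is nothing to flag, so report 1.0.
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_retraining(self):
        # Only judge once the window is full, to avoid reacting to a tiny sample.
        return len(self.results) == self.results.maxlen and self.accuracy() < self.floor

monitor = AccuracyMonitor(window=5, floor=0.8)
# Hypothetical stream of (prediction, actual outcome) pairs; quality degrades over time.
stream = [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0), (1, 0), (0, 1), (1, 1)]
flagged_at = None
for step, (pred, actual) in enumerate(stream, start=1):
    monitor.record(pred, actual)
    if monitor.needs_retraining():
        flagged_at = step
        print(f"step {step}: rolling accuracy {monitor.accuracy():.2f}, schedule retraining")
        break
```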

Tools and Platforms That Actually Matter

You don’t need dozens of tools — you need the right ones.

For beginners and professionals alike, these stand out:

- scikit-learn: Ideal for classical machine learning. Simple, powerful, and well-documented.

- TensorFlow: Excellent for large-scale and production-ready models.

- PyTorch: Preferred by researchers for flexibility and clarity.

Free tools are more than enough to learn and deploy real projects. Paid platforms mainly help with scalability and infrastructure, not intelligence.


Common Machine Learning Mistakes (and How to Avoid Them)

Many beginners fail not because machine learning is hard — but because they approach it incorrectly.

One frequent mistake is chasing advanced algorithms before understanding fundamentals. Fancy models won’t fix poor data or unclear objectives.

Another is overfitting — building a model that performs well on training data but fails in real-world scenarios. Always validate with unseen data.
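An extreme way to see overfitting is a "model" that simply memorizes its training data. The lookup-table learner below is a deliberately exaggerated, hypothetical example on a toy task (labeling numbers as even or odd): it scores perfectly on data it has seen and can only guess on anything new, which is exactly the gap that validating on unseen data reveals.

```python
from collections import Counter

def fit_memorizer(train_data):
    """Overfit on purpose: remember every training example exactly."""
    table = {x: y for x, y in train_data}
    # For unseen inputs, fall back to the most common training label.
    fallback = Counter(y for _, y in train_data).most_common(1)[0][0]
    return lambda x: table.get(x, fallback)

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

# Hypothetical task: label 1 if the number is even, 0 if odd.
train_data = [(x, int(x % 2 == 0)) for x in range(0, 20)]
test_data = [(x, int(x % 2 == 0)) for x in range(20, 30)]

model = fit_memorizer(train_data)
print("train accuracy:", accuracy(model, train_data))
print("test accuracy:", accuracy(model, test_data))
```

Judged on training data alone, this model looks flawless; the held-out numbers show it learned nothing about the actual pattern.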

Ignoring domain knowledge is equally dangerous. Models don’t understand context unless you guide them through features and constraints.

Finally, many underestimate maintenance. A model that works today may fail tomorrow if data patterns change. Monitoring is non-negotiable.

The Human Side of Machine Learning

Despite the hype, machine learning doesn’t replace human judgment — it depends on it.

Humans decide what data matters, what success looks like, and what ethical boundaries must be respected. Bias in data leads to biased models, and no algorithm fixes that automatically.

The most successful machine learning systems are collaborative — combining computational speed with human insight. That balance is where real value lives.

Conclusion: Is Machine Learning Worth Learning?

Absolutely — but not for the reasons hype suggests.

Machine learning isn’t about becoming an overnight AI wizard. It’s about learning how data-driven systems think, how decisions scale, and how technology adapts over time.

Whether you’re building products, analyzing data, writing about technology, or simply trying to stay relevant in a rapidly evolving world, understanding machine learning gives you leverage.

Start small. Stay curious. Focus on fundamentals. The rest compounds naturally.

If you found this guide helpful, explore one tool mentioned above or try applying machine learning thinking to a real problem you already care about.

FAQs

Machine learning allows computers to learn from data and improve decisions without explicit programming.

The basics are approachable. Mastery takes time, practice, and real-world application.

Some math helps, but practical understanding matters more at the beginning.

Machine learning is a subset of artificial intelligence, focused on data-driven learning.

You can grasp fundamentals in weeks, but proficiency develops over months of practice.

-

HEALTH7 months ago

HEALTH7 months agoChildren’s Flonase Sensimist Allergy Relief: Review

-

BLOG6 months ago

BLOG6 months agoDiscovering The Calamariere: A Hidden Gem Of Coastal Cuisine

-

TECHNOLOGY6 months ago

TECHNOLOGY6 months agoHow to Build a Mobile App with Garage2Global: From Idea to Launch in 2025

-

TECHNOLOGY2 months ago

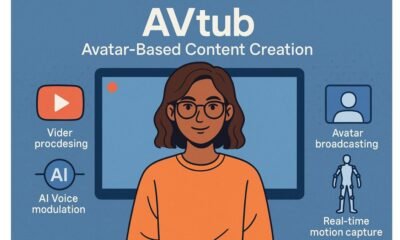

TECHNOLOGY2 months agoAVtub: The Rise of Avatar-Driven Content in the Digital Age

-

BLOG7 months ago

BLOG7 months agoWarmables Keep Your Lunch Warm ~ Lunch Box Kit Review {Back To School Guide}

-

HEALTH7 months ago

HEALTH7 months agoTurkey Neck Fixes That Don’t Need Surgery

-

BLOG6 months ago

BLOG6 months agoKeyword Optimization by Garage2Global — The Ultimate 2025 Guide

-

EDUCATION2 months ago

EDUCATION2 months agoHCOOCH CH2 H2O: Structure, Properties, Applications, and Safety of Hydroxyethyl Formate