TECHNOLOGY

Pentagon Big Tech Tesla Cybertrucks: What’s Really Going On Behind the Headlines?

What does the Pentagon have in common with Big Tech—and why are Tesla Cybertrucks suddenly part of the same conversation?

At first glance, the combination of the Pentagon, Big Tech, and Tesla Cybertrucks sounds like a strange mashup of defense briefings, Silicon Valley boardrooms, and futuristic stainless-steel pickup trucks. But dig a little deeper, and you’ll find this isn’t clickbait or conspiracy. It’s a real, unfolding story about how modern warfare, government procurement, and commercial innovation are colliding faster than most people realize.

In recent years, the Pentagon has leaned heavily on Big Tech for everything from cloud computing and AI to autonomous systems and logistics. At the same time, Tesla’s Cybertruck—designed as a consumer vehicle—has sparked serious conversations about military, law enforcement, and government use. That overlap raises important questions: Why would the Department of Defense be interested in commercial tech like Cybertrucks? What role does Big Tech play in shaping modern defense strategy? And what does this mean for taxpayers, innovation, and national security?

In this article, we’ll unpack the Pentagon–Big Tech–Tesla Cybertruck connection from the ground up. You’ll learn what’s driving this convergence, how it works in practice, the benefits and risks involved, and what to watch next as the lines between military and commercial technology continue to blur.

Understanding the Pentagon–Big Tech–Tesla Cybertrucks Connection

To understand how the Pentagon, Big Tech, and Tesla Cybertrucks fit together, it helps to think of modern defense as less about tanks and more about systems. Today’s military strength depends just as much on software, data, and rapid manufacturing as it does on traditional weapons.

The Pentagon increasingly relies on Big Tech companies like Amazon, Microsoft, Google, and Palantir to provide cloud infrastructure, artificial intelligence, and data analytics. These tools power everything from battlefield simulations to logistics planning and cyber defense. The Department of Defense simply can’t build this technology fast enough on its own, so it buys and adapts what the private sector already does well.

Tesla enters the picture because it represents the bleeding edge of commercial innovation. The Cybertruck, in particular, isn’t just a quirky electric pickup. It’s a computer on wheels, built with:

- A hardened stainless-steel exoskeleton

- Advanced driver-assistance and autonomous capabilities

- Over-the-air software updates

- Electric drivetrains with fewer mechanical failure points

From a Pentagon perspective, that combination is intriguing. Militaries have long adapted commercial vehicles and technology, from off-the-shelf pickup trucks to commercial drones. The Cybertruck fits into that tradition of adapting consumer tech for defense and government use, especially for logistics, base operations, and non-combat roles.

So when people link the Pentagon, Big Tech, and Tesla Cybertrucks, they’re really talking about a broader shift: the military sourcing innovation from Silicon Valley and electric vehicle manufacturers instead of relying solely on traditional defense contractors.

Why the Pentagon Is Turning to Big Tech and Commercial Vehicles

The Pentagon’s growing relationship with Big Tech and interest in vehicles like Tesla Cybertrucks isn’t accidental—it’s driven by necessity.

Traditional defense procurement is notoriously slow. Designing a military vehicle from scratch can take a decade or more. By the time it’s deployed, the technology is often outdated. Big Tech, on the other hand, operates on rapid iteration cycles. Software updates roll out weekly. Hardware improves annually. That speed is incredibly attractive to defense planners.

Commercial electric vehicles offer specific advantages. Electric drivetrains are quieter, which matters in reconnaissance and base security scenarios. They also reduce dependence on fuel supply lines, which have historically been one of the most vulnerable parts of military operations. Charging infrastructure can be deployed on bases more securely than fuel convoys can operate in hostile environments.

The Cybertruck’s design also aligns with certain military needs:

- Its exoskeleton may provide passive durability without added armor

- Flat panels simplify repairs and modifications

- Integrated software allows for custom defense applications

Big Tech supports all of this by providing the data platforms, AI tools, and cybersecurity frameworks needed to manage fleets of connected vehicles. In that sense, Tesla Cybertrucks are just one node in a much larger digital ecosystem the Pentagon is building.

Benefits and Real-World Use Cases

When you look past the headlines, the relationship among the Pentagon, Big Tech, and Tesla Cybertrucks offers several practical benefits, especially for non-combat and support roles.

One major use case is base operations. Military bases function like small cities, requiring transportation for personnel, equipment, and supplies. Cybertrucks could serve as:

- Maintenance vehicles

- Security patrol trucks

- Utility transport for tools and materials

Their electric drivetrains can lower long-term operating costs, and over-the-air updates reduce downtime. A vehicle whose software improves over time, even as its hardware ages, is a powerful concept for government fleets.

Another scenario is disaster response. The Pentagon often assists during natural disasters, where fuel availability can be limited. Electric trucks charged via generators or renewable sources could operate independently of disrupted fuel supply chains. Tesla’s experience with battery storage and energy management becomes especially relevant here.

Law enforcement and federal agencies are also watching closely. While a fully militarized Cybertruck isn’t likely in the near term, lightly modified versions for border security, infrastructure protection, or emergency response are well within the realm of possibility.

For Big Tech, the benefit is obvious: large, stable government contracts and real-world testing at scale. For Tesla, it’s validation that commercial EVs are robust enough for demanding environments. And for the Pentagon, it’s access to cutting-edge innovation without reinventing the wheel.

Step-by-Step: How Commercial Tech Becomes Military-Grade

The process by which the Pentagon adopts commercial technology like the Cybertruck isn’t as simple as buying a truck and painting it green. There’s a structured pathway that commercial technology follows before it’s used by the military.

The first step is evaluation. The Pentagon conducts testing to determine whether a commercial product meets baseline requirements for durability, cybersecurity, and reliability. For vehicles like the Cybertruck, this includes stress testing, software audits, and environmental trials.

Next comes modification. Very few commercial products are used as-is. The military may add secure communications, remove consumer features that pose security risks, or integrate the vehicle into existing command-and-control systems powered by Big Tech platforms.

The third step is limited deployment. Small pilot programs allow the Pentagon to gather real-world data. This is where Big Tech analytics shine, tracking performance, maintenance needs, and cost savings over time.

Finally, if the results are positive, the technology may be scaled across bases or agencies. Importantly, this doesn’t always mean combat use. Many technologies remain confined to logistics, training, or support roles, where they still deliver enormous value.

Throughout this process, cybersecurity is paramount. Big Tech partners help harden systems against intrusion, while Tesla’s software architecture allows for controlled, secure updates—something traditional military vehicles struggle with.

Tools, Comparisons, and Expert Recommendations

When evaluating Cybertrucks backed by Big Tech platforms against traditional military alternatives, the differences are stark.

Traditional military vehicles are rugged but expensive and slow to evolve. A standard tactical vehicle may cost hundreds of thousands of dollars and require specialized maintenance. Cybertrucks, even with modifications, are significantly cheaper on a per-unit basis and benefit from mass production.

From a software standpoint, Big Tech platforms outperform legacy defense systems in scalability and usability. Cloud-based tools enable real-time monitoring of vehicle fleets, predictive maintenance, and faster decision-making.
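To make the fleet-monitoring idea concrete, here is a minimal sketch of how a dashboard might flag vehicles for service. It is a simple rule-based stand-in for predictive maintenance, not how any real defense or Tesla system works. The `Telemetry` fields, vehicle IDs, thresholds, and readings are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Telemetry:
    """One hypothetical status report from a connected vehicle."""
    vehicle_id: str
    battery_health_pct: float  # estimated remaining battery capacity
    km_since_service: float    # distance driven since the last service


def needs_service(t: Telemetry, min_health: float = 80.0,
                  max_km: float = 20_000.0) -> bool:
    """Flag a vehicle if battery health is low or service is overdue."""
    return t.battery_health_pct < min_health or t.km_since_service > max_km


# Made-up readings for three vehicles (illustrative only).
fleet = [
    Telemetry("truck-01", 93.0, 4_200.0),
    Telemetry("truck-02", 76.5, 9_800.0),
    Telemetry("truck-03", 88.0, 21_500.0),
]

flagged = [t.vehicle_id for t in fleet if needs_service(t)]
print(flagged)  # ['truck-02', 'truck-03']
```

A production system would likely replace the fixed thresholds with a model trained on historical failure data, but the flow is the same: collect telemetry, score each vehicle, and surface the ones that need attention.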

That said, there are trade-offs. Commercial vehicles aren’t designed for front-line combat. They lack armor, weapon mounts, and redundancy systems required in hostile environments. Experts generally recommend using Cybertrucks for:

- Base and facility operations

- Training environments

- Disaster and humanitarian missions

For organizations considering similar approaches, best practices include:

- Starting with limited pilot programs

- Prioritizing cybersecurity integration

- Clearly defining non-combat use cases

The consensus among defense analysts is that commercial tech shouldn’t replace traditional military hardware—but it can complement it in powerful ways.

Common Mistakes and How to Avoid Them

One common mistake in discussions about the Pentagon, Big Tech, and Tesla Cybertrucks is assuming this represents full militarization of consumer vehicles. In reality, most interest lies in support roles, not battlefield dominance.

Another error is underestimating cybersecurity risks. Connected vehicles are vulnerable if not properly secured. This is why Big Tech’s role is so critical—without enterprise-grade security, these systems would be unacceptable for government use.

There’s also the risk of overhyping cost savings. While electric vehicles reduce fuel and maintenance costs, upfront integration and training expenses can be significant. Realistic budgeting is essential.
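A quick break-even calculation shows why realistic budgeting matters. The sketch below assumes a one-time integration and training cost per vehicle and a constant yearly saving on fuel and maintenance. Both figures are hypothetical placeholders, not real procurement data, and the function name `break_even_years` is ours.

```python
from typing import Optional


def break_even_years(upfront_extra: float,
                     annual_savings: float) -> Optional[float]:
    """Years until cumulative savings cover the extra upfront cost.

    Returns None when there are no yearly savings, because the
    extra cost is then never recovered.
    """
    if annual_savings <= 0:
        return None
    return upfront_extra / annual_savings


# Hypothetical per-vehicle figures for illustration only.
integration_and_training = 30_000.0   # one-time cost
fuel_and_maintenance_saved = 6_000.0  # saved per year

years = break_even_years(integration_and_training, fuel_and_maintenance_saved)
print(years)  # 5.0
```

If the expected service life of the vehicle is shorter than the break-even period, the headline savings never materialize, which is exactly the kind of result a pilot program should surface.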

To avoid these pitfalls, agencies should:

- Clearly define mission requirements

- Invest early in cybersecurity

- Measure success with data, not headlines

Done correctly, commercial tech integration can enhance capabilities without compromising security or readiness.

Conclusion

The conversation about the Pentagon, Big Tech, and Tesla Cybertrucks isn’t about sci-fi warfare or flashy gimmicks. It’s about a fundamental shift in how governments source and deploy technology. The Pentagon is no longer just a customer of traditional defense contractors; it’s a sophisticated integrator of commercial innovation.

Big Tech provides the digital backbone. Tesla offers a glimpse into what modern, software-driven vehicles can look like. Together, they illustrate a future where military and civilian technology evolve side by side, each influencing the other.

For readers, the takeaway is simple: this trend is real, it’s accelerating, and it will shape everything from national defense to emergency response. Whether you’re a technologist, policymaker, or curious observer, it’s worth paying attention to where these worlds intersect.

If you have thoughts on this convergence—or questions about where it’s heading next—join the conversation and keep exploring the topic.

FAQs

The Pentagon is exploring commercial electric vehicles for logistics, base operations, and disaster response due to their efficiency, software integration, and lower operating costs.

No confirmed combat deployments exist. Interest is primarily focused on non-combat and support roles.

Big Tech provides cloud computing, AI, data analytics, and cybersecurity platforms that enable connected vehicle fleets and modern defense operations.

In many support roles, yes. Commercial vehicles cost less upfront and are cheaper to maintain, though integration costs must be considered.

Any connected system carries risk, which is why cybersecurity audits and hardened software are mandatory before government use.


Artificial Intelligence for Artists: A Practical, Human Guide to Creating Smarter (Not Soulless) Art

If you’re an artist, chances are you’ve felt it—that mix of curiosity and unease when someone mentions artificial intelligence for artists. Maybe a friend sent you an AI-generated image that looked too good. Maybe a client asked if you could “use AI to speed things up.” Or maybe you’ve quietly wondered whether these tools could actually help you break a creative block instead of replacing what you do.

I’ve been in creative rooms where AI felt like a gimmick—and in others where it genuinely unlocked new possibilities. The truth lives in the middle. Used thoughtfully, artificial intelligence for artists isn’t about cutting corners or losing your voice. It’s about expanding your toolkit, protecting your time, and giving your imagination more room to breathe.

This guide is written like a conversation between working creatives. We’ll unpack what AI really is (without jargon), how artists are using it right now, where it shines, where it fails, and how to integrate it without sacrificing authorship, ethics, or originality. By the end, you’ll know exactly how to decide if, when, and how AI belongs in your practice.

Artificial Intelligence for Artists: What It Actually Means (Without the Tech Headache)

Artificial intelligence for artists sounds intimidating because it’s often explained from a programmer’s perspective. Let’s flip that.

Think of AI less like a robot artist and more like a hyper-fast creative assistant. It doesn’t feel inspiration. It recognizes patterns—millions of them—then predicts what might come next based on your input. That’s it.

When you type a prompt into an image generator, AI isn’t “imagining” a scene. It’s statistically assembling visual elements based on what it has learned from existing images. When you use AI to upscale a sketch or generate color palettes, it’s not making aesthetic judgments—it’s applying learned probabilities.
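If you are curious what “applying learned probabilities” looks like, here is a toy sketch. It is an analogy only: real image generators are vastly more complex and do not work on word pairs. This tiny model counts which word tends to follow which in a made-up training text, then predicts the most likely next word. The corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(words):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


# A made-up "training set" of phrases.
corpus = "soft light on water soft light on stone soft shadow on stone".split()
model = train_bigrams(corpus)

print(predict_next(model, "soft"))    # light
print(predict_next(model, "on"))      # stone
print(predict_next(model, "marble"))  # None
```

Notice that the model has no opinion about words it never saw, and no sense of whether “soft light” is beautiful. It only reports what was common in its training data, which is why your direction matters.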

For artists, this matters because AI is reactive, not proactive. You remain the director. The stronger your creative intent, the better the results.

In practice, artificial intelligence for artists usually falls into four categories:

- Ideation support: mood boards, rough concepts, composition variations

- Production assistance: upscaling, background generation, cleanup

- Workflow automation: tagging, resizing, exporting, batch tasks

- Exploration tools: style mixing, surreal experimentation, rapid iteration

The biggest misconception is that AI replaces skill. In reality, it amplifies skill. A trained artist gets far more value from AI than a beginner because they can spot errors, refine prompts, and shape outcomes with intention.

Why Artificial Intelligence for Artists Matters Right Now

We’re at a turning point similar to when digital art tablets first appeared. Traditional artists worried about losing craft. Digital artists worried about oversaturation. Then the dust settled—and tools became normal.

Artificial intelligence for artists matters today because:

- Creative timelines are shrinking

- Clients expect faster iteration

- Content demand has exploded across platforms

- Burnout is real, especially for freelancers

AI doesn’t remove the need for taste, judgment, or experience. It removes busywork. And in creative careers, time saved often means better art—not lazier art.

More importantly, artists who understand AI can:

- Set boundaries with clients

- Price work based on value, not hours

- Experiment without fear of wasting materials

- Stay competitive without racing to the bottom

Ignoring AI doesn’t protect your craft. Understanding it does.

Benefits and Real-World Use Cases of Artificial Intelligence for Artists

Let’s move past theory and talk about how artificial intelligence for artists is actually being used in studios, home offices, and freelance workflows.

Concept Artists and Illustrators

AI is frequently used at the sketch stage. Instead of staring at a blank canvas, artists generate rough compositions, lighting ideas, or environment concepts. These outputs aren’t final—they’re springboards.

A concept artist I worked with described AI as “visual brainstorming at warp speed.” He still painted everything himself, but he started with better questions.

Fine Artists and Mixed Media Creators

Painters and sculptors use AI to test compositions before committing materials. Photographers experiment with surreal backdrops. Installation artists map spatial ideas quickly.

AI doesn’t replace the final piece—it informs it.

Graphic Designers and Brand Artists

Designers use AI for:

- Rapid layout variations

- Background extensions

- Mockups for client approval

This reduces revision fatigue and keeps creative energy focused on strategy and storytelling.

Digital Creators and Social Artists

Artists producing content regularly—thumbnails, posters, album art—use AI to maintain consistency without burnout. AI handles repetitive variations so humans focus on narrative and emotion.

The common thread? Artificial intelligence for artists works best when it supports decision-making, not when it makes decisions for you.

A Step-by-Step Guide to Using Artificial Intelligence for Artists (Without Losing Control)

Step 1: Define Your Creative Intent First

Never open an AI tool without a goal. Are you exploring mood? Composition? Color? Texture? The clearer your intent, the more useful AI becomes.

Write a one-sentence brief like:

“I’m exploring moody lighting for a cyberpunk alley scene.”

This keeps AI outputs aligned with your vision instead of distracting you.

Step 2: Choose the Right Tool for the Job

Not all AI tools are interchangeable. Some are better for photorealism, others for illustration, others for design cleanup. We’ll compare tools in detail shortly.

Step 3: Learn Prompting Like a Visual Language

Prompting isn’t cheating—it’s communication. Artists who understand visual terminology get better results.

Instead of:

“Cool fantasy character”

Try:

“Painterly fantasy portrait, soft rim lighting, muted color palette, expressive brush strokes, shallow depth of field”

Your art education matters here.
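If you write many prompts, it can help to treat them as structured briefs. The sketch below is one possible way to assemble a prompt from named visual ingredients. The `build_prompt` helper and its parameter names are our own invention and are not part of any AI tool’s API. It rebuilds the longer prompt above from its parts.

```python
def build_prompt(subject, style=None, lighting=None, palette=None,
                 technique=None, camera=None):
    """Join the visual ingredients of a brief into one prompt string.

    Any ingredient left as None is simply skipped.
    """
    parts = [f"{style} {subject}" if style else subject]
    parts += [p for p in (lighting, palette, technique, camera) if p]
    return ", ".join(parts)


prompt = build_prompt(
    subject="fantasy portrait",
    style="Painterly",
    lighting="soft rim lighting",
    palette="muted color palette",
    technique="expressive brush strokes",
    camera="shallow depth of field",
)
print(prompt)
```

Keeping the ingredients separate makes iteration easier: swap only the lighting or only the palette and compare the results, just as you would with thumbnail sketches.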

Step 4: Iterate, Don’t Settle

The first output is rarely the best. Use AI like sketch paper. Generate variations, compare, refine.

Step 5: Take Back Control in Post-Processing

The most professional results come when artists repaint, redraw, or collage AI outputs. This is where authorship becomes clear—and defensible.

Artificial Intelligence for Artists: Tools, Comparisons, and Honest Recommendations

Midjourney

Best for expressive, painterly visuals and fast ideation. Incredible aesthetic range, but limited control unless you’re experienced with prompts.

Pros: Beautiful outputs, strong community

Cons: Less precise, subscription-based

Stable Diffusion

Ideal for artists who want control. You can train custom styles and work locally.

Pros: Customization, open-source flexibility

Cons: Steeper learning curve

Adobe Firefly

Designed with artists and licensing in mind. Integrates seamlessly with Photoshop and Illustrator.

Pros: Commercially safe, intuitive

Cons: Less experimental

Free vs Paid Tools

Free tools are great for exploration. Paid tools save time and offer reliability. Professionals usually blend both.

The best setup isn’t one tool—it’s a workflow.

Common Mistakes Artists Make with AI (and How to Fix Them)

The biggest mistake? Letting AI lead instead of follow.

Many artists:

- Skip concept development

- Accept first outputs

- Overuse trending styles

- Ignore ethical considerations

Fixes are simple but intentional:

- Always sketch or write first

- Use AI outputs as references, not finals

- Develop a recognizable personal style

- Understand usage rights and attribution

Artificial intelligence for artists rewards discernment. Taste is your competitive edge.

Ethics, Authorship, and Staying True to Your Voice

This conversation matters. Artists worry about originality—and rightly so.

Ethical use means:

- Being transparent when required

- Avoiding direct imitation of living artists’ styles

- Adding substantial human transformation

- Respecting platform licensing rules

AI doesn’t erase authorship if your creative decisions dominate the process. The line isn’t tool usage—it’s intent and transformation.

The Future of Artificial Intelligence for Artists (and Why Humans Still Matter)

AI will get faster. Outputs will get cleaner. But meaning, context, and emotional resonance remain human domains.

Audiences don’t connect with perfection. They connect with perspective.

Artists who thrive won’t be those who reject AI—or blindly adopt it—but those who use it deliberately, ethically, and creatively.

If you want a solid visual walkthrough of AI-assisted art workflows, this YouTube breakdown is genuinely helpful for artists:

https://www.youtube.com/watch?v=2v5R4wEJbLQ

Conclusion: Artificial Intelligence for Artists Is a Tool, Not a Threat

Artificial intelligence for artists isn’t about replacing creativity—it’s about reclaiming it. When used with intention, AI frees you from repetitive labor and gives you space to think, feel, and experiment.

The artists who benefit most aren’t the fastest or loudest. They’re the ones who stay curious, grounded, and human.

Try one tool. Test one workflow. Keep your standards high. And remember: the art still begins—and ends—with you.

FAQs

No. It’s a tool. Authorship depends on how much creative control and transformation you apply.

Yes, depending on the platform’s licensing terms and your level of human contribution.

It replaces repetitive tasks, not taste, storytelling, or originality.

Purely AI-generated work often isn’t. Human-transformed work usually is.

Visual literacy, art fundamentals, and clear creative direction.


Brain Chip Technology: How Human Minds Are Beginning to Interface With Machines

Brain chip technology is no longer a sci-fi trope whispered about in futuristic novels or late-night podcasts. It’s real, it’s advancing quickly, and it’s quietly reshaping how we think about health, ability, communication, and even what it means to be human. If you’ve ever wondered whether humans will truly connect their brains to computers—or whether that future is already here—you’re asking the right question at exactly the right time.

Let’s be clear up front: brain chip technology refers to implantable or wearable systems that read, interpret, and sometimes stimulate neural activity to help the brain communicate directly with machines. This technology matters because it sits at the intersection of medicine, neuroscience, artificial intelligence, ethics, and human potential. In this deep-dive guide, you’ll learn how brain chips work, who they help today, where the technology is heading, the benefits and risks involved, and how to separate hype from reality.

Understanding Brain Chip Technology in Simple Terms

At its core, brain chip technology is about translating the language of the brain—electrical signals fired by neurons—into something computers can understand. Think of your brain as an incredibly fast, complex orchestra where billions of neurons communicate through electrical impulses. A brain chip acts like a highly sensitive microphone placed inside that orchestra, listening carefully and translating those signals into digital commands.

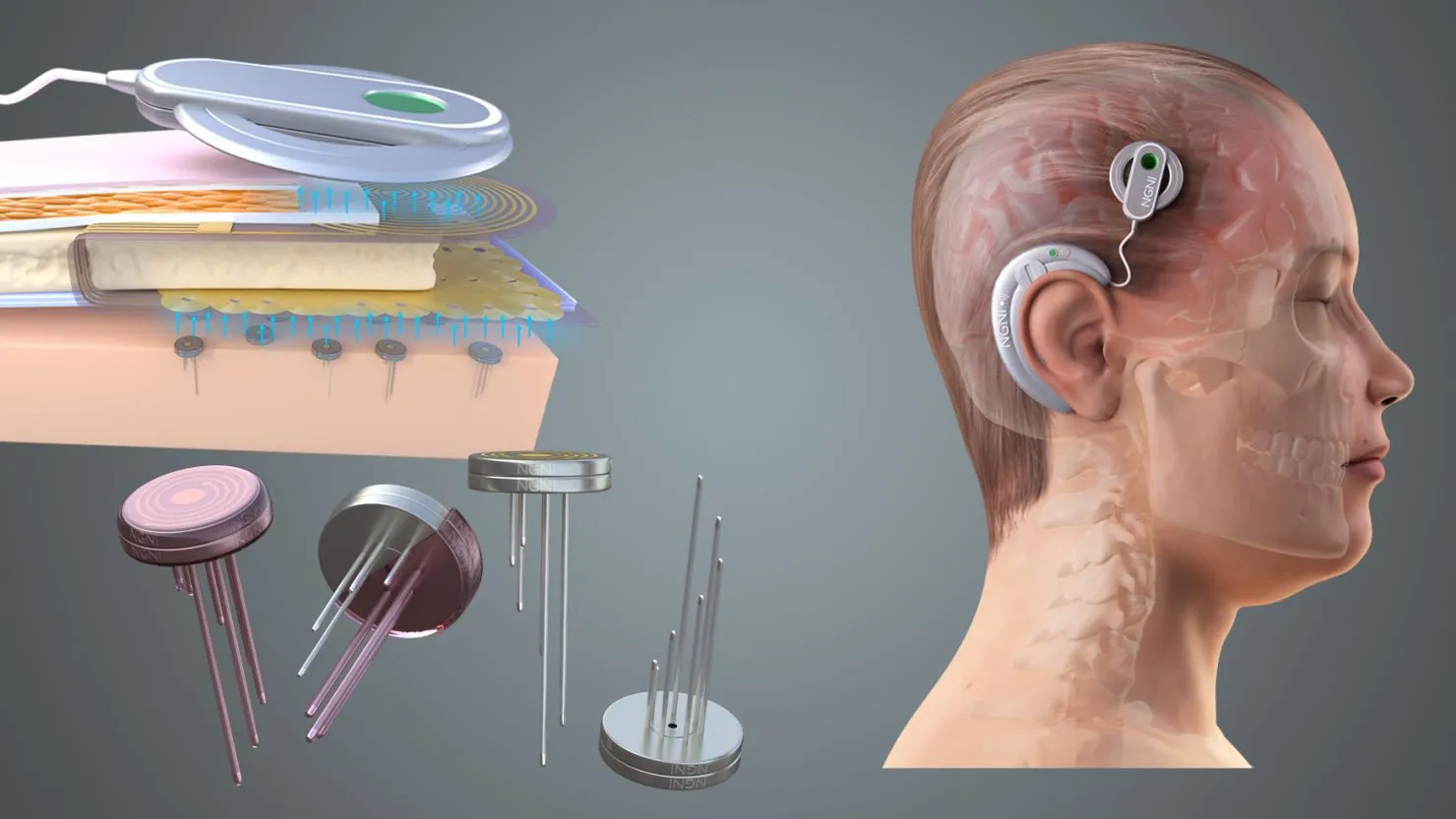

This field is more formally known as brain-computer interfaces (BCIs). Some BCIs are external, using EEG caps that sit on the scalp. Others are invasive, meaning tiny electrodes are surgically implanted into specific regions of the brain. The more closely you listen to neurons, the more accurate the signal becomes, which is why implantable brain chips are such a big focus right now.

To make this relatable, imagine typing without your hands. Instead of pressing keys, you think about moving your fingers, and the brain chip detects those intentions and converts them into text on a screen. That’s not hypothetical—it’s already happening in clinical trials. The magic lies not in reading thoughts like a mind reader, but in detecting patterns associated with intention, movement, or sensation.

The Science Behind Brain Chip Technology (Without the Jargon)

The human brain communicates using electrochemical signals. When neurons fire, they create tiny voltage changes. Brain chips use microelectrodes to detect these changes and feed the data into algorithms trained to recognize patterns.

Modern brain chip systems rely heavily on machine learning. Over time, the system “learns” what specific neural patterns mean for a particular person. This personalization is critical because no two brains are wired exactly alike.

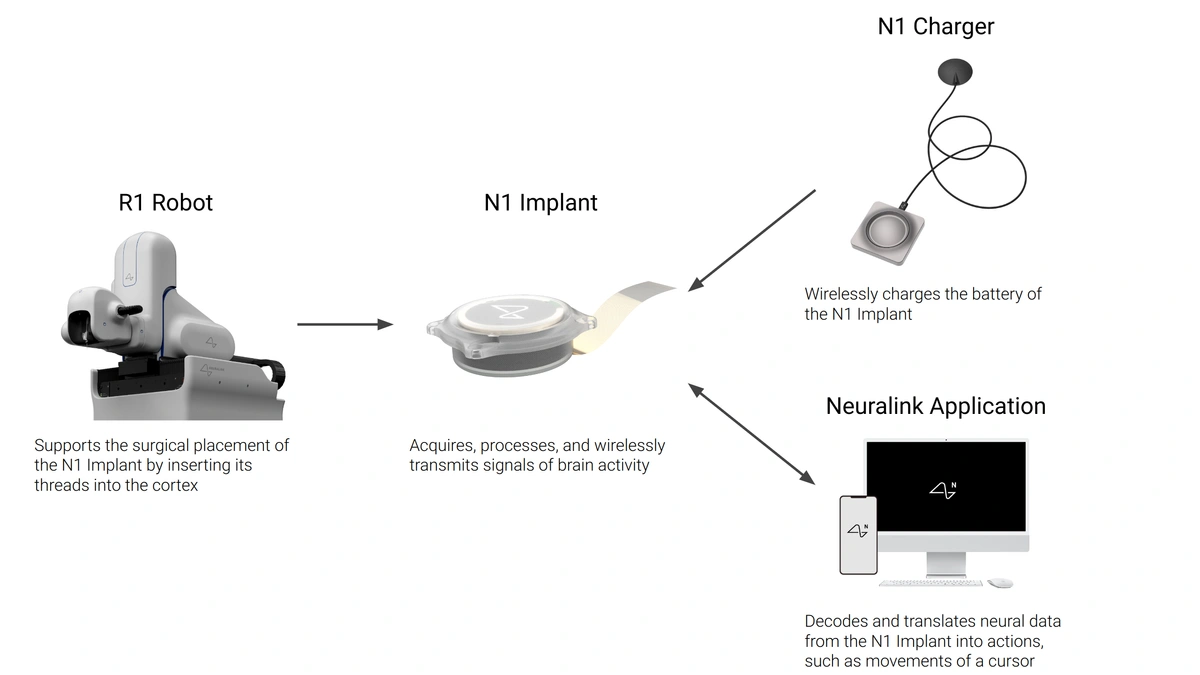

There are three main technical components involved:

- Neural sensors that capture brain activity

- Signal processors that clean and decode the data

- Output systems that translate signals into actions like moving a cursor or activating a prosthetic
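The three components above can be sketched as a tiny pipeline. This is a deliberately simplified illustration, not how any real device works: actual systems record from many electrodes at once and use far more sophisticated decoding. The sample values, the threshold, and the function names are all hypothetical.

```python
from statistics import mean


def capture(raw_samples):
    """Neural sensor stage: stands in for electrode readings (microvolts)."""
    return [float(v) for v in raw_samples]


def smooth(signal, window=3):
    """Signal processing stage: trailing moving average to damp noise."""
    return [mean(signal[max(0, i - window + 1): i + 1])
            for i in range(len(signal))]


def detect_intent(signal, threshold):
    """Decoding step: average activity above the threshold counts as intent."""
    return mean(signal) > threshold


def act(intent_detected):
    """Output stage: turn the decoded result into a device command."""
    return "move cursor" if intent_detected else "hold still"


def run_pipeline(raw_samples, threshold=5.0):
    return act(detect_intent(smooth(capture(raw_samples)), threshold))


# Made-up readings: quiet activity versus a burst of activity.
resting = [2, 3, 1, 2, 3, 2]
active = [2, 9, 11, 10, 12, 9]

print(run_pipeline(resting))  # hold still
print(run_pipeline(active))   # move cursor
```

Each stage maps onto one component in the list: `capture` is the sensor, `smooth` and `detect_intent` are the signal processor, and `act` is the output system.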

Some advanced brain chips also work in reverse. They don’t just read signals—they send electrical stimulation back into the brain. This is how treatments like deep brain stimulation already help patients with Parkinson’s disease. The next generation aims to restore sensation, reduce chronic pain, or even repair damaged neural circuits.

Why Brain Chip Technology Matters More Than Ever

The urgency around brain chip technology isn’t about novelty—it’s about unmet needs. Millions of people worldwide live with paralysis, neurodegenerative diseases, spinal cord injuries, or speech impairments. Traditional medicine can only go so far once neural pathways are damaged.

Brain chips offer a workaround. Instead of repairing broken biological connections, they bypass them. That’s a profound shift in how we approach disability and recovery.

Beyond healthcare, the technology raises serious questions about human augmentation, cognitive enhancement, privacy, and ethics. We’re standing at the same kind of crossroads humanity faced with the internet or smartphones—except this time, the interface is the human brain itself.

Real-World Benefits and Use Cases of Brain Chip Technology

Restoring Movement and Independence

One of the most powerful applications of brain chip technology is helping paralyzed individuals regain control over their environment. People with spinal cord injuries have used brain chips to control robotic arms, wheelchairs, and even their own paralyzed limbs through external stimulators.

The emotional impact of this cannot be overstated. Being able to pick up a cup, type a message, or scratch an itch after years of immobility is life-changing. These aren’t flashy demos; they’re deeply human victories.

Communication for Locked-In Patients

Patients with ALS or brainstem strokes sometimes retain full cognitive ability but lose the power to speak or move. Brain chips allow them to communicate by translating neural signals into text or speech.

In clinical trials conducted by organizations like BrainGate, participants have typed sentences using only their thoughts. For families, this restores not just communication, but dignity and connection.

Treating Neurological Disorders

Brain chips are also being explored for treating epilepsy, depression, OCD, and chronic pain. By monitoring abnormal neural patterns and intervening in real time, these systems could offer more precise treatments than medication alone.

Unlike drugs that affect the entire brain, targeted neural stimulation focuses only on the circuits involved, reducing side effects and improving outcomes.

Future Cognitive Enhancement (With Caveats)

While medical use comes first, many people are curious about enhancement: faster learning, improved memory, or direct brain-to-AI interaction. Companies like Neuralink, co-founded by Elon Musk, openly discuss long-term goals involving human-AI symbiosis.

It’s important to note that enhancement applications remain speculative and ethically complex. Today’s reality is firmly medical and therapeutic.

A Practical, Step-by-Step Look at How Brain Chip Technology Is Used

Understanding the process helps demystify the technology and separate reality from hype.

The journey typically begins with patient evaluation. Neurologists determine whether a person’s condition could benefit from a brain-computer interface. Not everyone is a candidate, and risks are carefully weighed.

Next comes surgical implantation for invasive systems. Using robotic assistance and brain imaging, surgeons place microelectrodes with extreme precision. The procedure is delicate but increasingly refined.

After implantation, calibration begins. This is where machine learning plays a central role. The system observes neural activity while the user attempts specific actions, gradually learning how to interpret their signals.
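As a rough picture of what calibration involves, here is a minimal sketch using a nearest-centroid classifier, one of the simplest possible learning methods. It is our illustrative choice, not the method any particular device uses. The two-number “feature vectors” and the labels are made up. Real recordings have many more dimensions.

```python
from math import dist
from statistics import mean


def calibrate(trials):
    """Average the recorded features for each attempted action.

    `trials` is a list of (label, feature_vector) pairs collected while
    the user attempts known actions. Returns label -> centroid.
    """
    by_label = {}
    for label, features in trials:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }


def decode(centroids, features):
    """Pick the action whose calibrated centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Made-up calibration session: the user attempts each action twice.
calibration_trials = [
    ("left", (8.0, 2.0)),
    ("left", (9.0, 3.0)),
    ("right", (2.0, 8.0)),
    ("right", (3.0, 9.0)),
]
centroids = calibrate(calibration_trials)

print(decode(centroids, (7.5, 3.5)))  # left
print(decode(centroids, (1.0, 9.0)))  # right
```

Because the centroids come from one person’s own recordings, the decoder is personal to that user, which is why every new user needs a calibration session of their own.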

Training follows. Users practice controlling devices through thought alone. This stage requires patience, but users often improve with practice as brain and machine adapt to each other.

Ongoing monitoring and updates help maintain safety and performance. In regulated environments, agencies like the FDA oversee device safety and effectiveness, while ethics review boards oversee how trials are conducted.

Tools, Platforms, and Leading Brain Chip Developers

Several organizations are shaping the present and future of brain chip technology.

Synchron focuses on minimally invasive implants delivered through blood vessels, avoiding open-brain surgery. Their approach reduces risk and speeds recovery.

Blackrock Neurotech provides research-grade neural implants used in many clinical trials worldwide. Their Utah Array has become a standard in neuroscience research.

Academic-industry partnerships continue to drive innovation, blending rigorous science with real-world application. While consumer versions don’t exist yet, medical systems are advancing steadily.

The free-versus-paid distinction that applies to software doesn’t really apply here. What matters is the distinction between research access and commercial availability: most current brain chip systems are accessible only through clinical trials.

Common Mistakes and Misconceptions About Brain Chip Technology

One of the biggest mistakes people make is assuming brain chips can read private thoughts. They cannot. These systems decode specific neural patterns related to trained tasks, not abstract ideas or secrets.

Another misconception is that brain chip users lose autonomy or become controlled by machines. In reality, control flows from human to machine, not the other way around. The user remains fully conscious and in charge.

Many also overestimate the speed of development. While progress is impressive, widespread consumer brain chips are not imminent. Regulatory, ethical, and safety hurdles are substantial and necessary.

Finally, ignoring ethical considerations is a serious error. Data privacy, consent, and long-term effects must be addressed responsibly as the technology evolves.

Ethical, Legal, and Social Implications You Should Understand

Brain chip technology forces society to confront new ethical territory. Who owns neural data? How do we protect users from misuse? Could access become unequal?

Regulation is still catching up. Governments, ethicists, and technologists must collaborate to ensure safeguards without stifling innovation. Transparency and public dialogue are essential.

The most responsible developers emphasize medical need first, enhancement later—if ever. This prioritization builds trust and aligns innovation with genuine human benefit.

Where Brain Chip Technology Is Headed Next

The next decade will likely bring smaller implants, wireless power, longer lifespans, and improved signal resolution. Non-invasive and minimally invasive approaches will expand accessibility.

Integration with AI will improve decoding accuracy and personalization. However, meaningful breakthroughs will come from clinical success stories, not flashy announcements.

Brain chip technology won’t replace smartphones overnight, but it may quietly redefine assistive medicine and rehabilitation in ways we’re only beginning to understand.

Conclusion: What Brain Chip Technology Really Means for Humanity

Brain chip technology isn’t about turning people into cyborgs. It’s about restoring lost abilities, unlocking communication, and giving people back parts of their lives once thought gone forever. The true power of this technology lies not in futurism, but in compassion.

As research continues and safeguards mature, brain chips may become one of the most humane uses of advanced technology we’ve ever developed. If you’re curious, cautious, or cautiously optimistic—you’re in good company. The future of brain chip technology will be shaped not just by engineers, but by informed, engaged humans like you.

FAQs

Are brain chips safe?

Current systems are tested under strict clinical protocols. While surgery carries risk, safety has improved significantly.

Can brain chips read your thoughts?

No. They detect trained neural patterns related to specific tasks, not abstract or private thoughts.

Who benefits most from brain chips today?

People with paralysis, ALS, spinal cord injuries, and certain neurological disorders benefit most today.

Are brain chip implants permanent?

Some implants are designed for long-term use, while others can be removed or upgraded.

Are brain chips available to the general public?

Not yet. Most access is through clinical trials or specialized medical programs.

TECHNOLOGY

Face Recognition Technology: How It Works, Where It’s Used, and What the Future Holds

Have you ever unlocked your phone without typing a password, walked through an airport without showing your boarding pass, or noticed a camera that seems a little too smart? Behind those everyday moments is face recognition technology, quietly reshaping how we interact with devices, services, and even public spaces.

I still remember the first time I saw face recognition used outside a smartphone. It was at a corporate office where employees simply walked in—no badges, no sign-in desk. The system recognized faces in real time, logging attendance automatically. It felt futuristic… and slightly unsettling. That mix of convenience and concern is exactly why face recognition technology matters today.

This technology is no longer limited to sci-fi movies or government labs. It’s now embedded in smartphones, banking apps, airports, retail stores, healthcare systems, and even classrooms. Businesses love it for security and efficiency. Consumers love it for speed and ease. Regulators, meanwhile, are racing to keep up.

In this guide, I’ll walk you through what face recognition technology really is, how it works under the hood, where it’s being used successfully, and what mistakes to avoid if you’re considering it. I’ll also share practical tools, real-world use cases, ethical considerations, and where this rapidly evolving technology is headed next. Whether you’re a tech enthusiast, business owner, or simply curious, you’ll leave with a clear, balanced understanding.

What Is Face Recognition Technology? (Explained Simply)

At its core, face recognition technology is a biometric system that identifies or verifies a person based on their facial features. Think of it like a digital version of how humans recognize each other—but powered by algorithms instead of memory.

Here’s a simple analogy:

Imagine your brain keeping a mental “map” of someone’s face—eye distance, nose shape, jawline, and overall proportions. Face recognition software does something similar, but mathematically. It converts facial characteristics into data points, creating a unique facial signature often called a faceprint.

The process usually involves three stages:

- Face Detection – The system detects a human face in an image or video.

- Feature Analysis – Key facial landmarks (eyes, nose, mouth, cheekbones) are measured.

- Matching – The extracted data is compared against stored face templates in a database.

What makes modern face recognition powerful is artificial intelligence and deep learning. Older systems struggled with lighting, angles, or facial expressions. Today’s AI-driven models improve with more data, learning to recognize faces even with glasses, masks, facial hair, or aging.

It’s also important to distinguish between face detection and face recognition. Detection simply finds a face in an image. Recognition goes a step further—it identifies who that face belongs to. Many apps use detection only, while security systems rely on full recognition.

Why Face Recognition Technology Matters Today

Face recognition technology sits at the intersection of security, convenience, and identity. In a world where digital fraud, identity theft, and data breaches are rising, traditional passwords are no longer enough. Faces, unlike passwords, can’t be forgotten or easily stolen—at least in theory.

From a business perspective, the value is clear:

- Faster authentication than PINs or cards

- Reduced fraud in banking and payments

- Improved customer experience through personalization

- Automation of manual identity checks

From a societal perspective, the stakes are higher. Face recognition can enhance public safety, but it also raises serious questions about privacy, consent, and surveillance. This dual nature is why governments, tech companies, and civil rights groups are all paying close attention.

The technology isn’t inherently good or bad—it’s how it’s used that matters. Understanding its mechanics and implications is the first step to using it responsibly.

Benefits and Real-World Use Cases of Face Recognition Technology

1. Enhanced Security and Fraud Prevention

One of the strongest advantages of face recognition technology is security. Banks use it to verify users during mobile transactions. Offices use it to control access to restricted areas. Even smartphones rely on facial biometrics to protect sensitive data.

Unlike passwords or access cards, faces are difficult to duplicate. When combined with liveness detection (checking that the face is real and not a photo), systems become significantly harder to fool.

2. Seamless User Experience

Convenience is where face recognition truly shines. No typing. No remembering. No physical contact. Just look at the camera.

Examples include:

- Unlocking phones and laptops

- Contactless payments

- Hotel check-ins without front desks

- Smart homes that recognize residents

For users, this feels almost magical. For businesses, it reduces friction and boosts satisfaction.

3. Attendance, Access, and Workforce Management

Companies and schools use face recognition systems to track attendance automatically. No buddy punching. No fake sign-ins. The system logs time accurately, saving administrative hours and improving accountability.

4. Healthcare and Patient Safety

Hospitals use face recognition to identify patients, access medical records quickly, and prevent identity mix-ups. In emergencies, this can save lives by ensuring the right treatment reaches the right person.

5. Law Enforcement and Public Safety

When used responsibly and legally, face recognition helps identify missing persons, suspects, and victims. However, this use case is also the most controversial due to privacy concerns—highlighting the need for strict oversight.

How Face Recognition Technology Works: Step-by-Step

Let’s break down the technical workflow in plain language.

Step 1: Image or Video Capture

The system captures an image via a camera—this could be a smartphone camera, CCTV feed, or laptop webcam. Quality matters here. Better lighting and resolution improve accuracy.

Step 2: Face Detection

Using computer vision, the system locates a face within the image. It draws a digital boundary around it, ignoring backgrounds or other objects.

Step 3: Facial Feature Extraction

This is the heart of face recognition technology. The software measures distances between facial landmarks:

- Eye spacing

- Nose width

- Jawline shape

- Lip contours

These measurements form a numerical template unique to each individual.
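As a rough illustration of the measurement idea above, the sketch below turns a handful of landmark coordinates into a small numeric template. The landmark names, the coordinates, and the choice to scale by eye spacing are all assumptions made for this example; production systems generally learn their features with deep neural networks rather than relying on a few hand-picked distances.

```python
import math

# Hypothetical (x, y) pixel coordinates for a few facial landmarks.
# A real system would obtain these from a landmark detector.
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_left": (42.0, 62.0),
    "nose_right": (58.0, 62.0),
    "mouth_left": (38.0, 80.0),
    "mouth_right": (62.0, 80.0),
}


def distance(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def build_template(points):
    """Return distance ratios, scaled by eye spacing so image size cancels out."""
    eye_spacing = distance(points["left_eye"], points["right_eye"])
    if eye_spacing == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    nose_width = distance(points["nose_left"], points["nose_right"])
    mouth_width = distance(points["mouth_left"], points["mouth_right"])
    return [nose_width / eye_spacing, mouth_width / eye_spacing]


template = build_template(landmarks)
print(template)
```

Dividing by eye spacing means the same face photographed at twice the resolution should produce the same ratios, which is one simple way to make a template less sensitive to camera distance.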

Step 4: Data Encoding

The facial template is converted into a mathematical representation. This data—not the raw image—is usually stored to protect privacy.
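One way to picture this encoding step is shown below: a feature vector is scaled to unit length and packed into a compact byte string, which is what would be stored instead of the photo. The function names, the two-value vector, and the float32 layout are assumptions for illustration, not the format of any particular product.

```python
import math
import struct


def encode_template(values):
    """L2-normalize a feature vector and pack it as little-endian float32 bytes."""
    norm = math.sqrt(sum(v * v for v in values))
    if norm == 0:
        raise ValueError("cannot encode an all-zero template")
    unit = [v / norm for v in values]
    return struct.pack(f"<{len(unit)}f", *unit)


def decode_template(blob):
    """Unpack bytes produced by encode_template back into a list of floats."""
    count = len(blob) // 4  # each float32 occupies 4 bytes
    return list(struct.unpack(f"<{count}f", blob))


features = [3.0, 4.0]  # hypothetical extracted features
blob = encode_template(features)
restored = decode_template(blob)
print(len(blob), restored)
```

Storing the encoded template rather than the image reduces what is exposed in a breach, but the template is still biometric data and should be protected accordingly.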

Step 5: Matching and Decision

The system compares the new face template against stored templates. If the similarity score crosses a predefined threshold, access is granted or identity confirmed.
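The comparison described above can be sketched with cosine similarity, a common way to score how closely two templates point in the same direction. The enrolled names, the vectors, and the 0.9 threshold are made-up values for this example; real deployments tune the threshold against measured error rates.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("templates must be non-zero")
    return dot / (norm_a * norm_b)


def best_match(probe, enrolled, threshold=0.9):
    """Return (name, score) for the closest enrolled template.

    The name is None when the best score falls below the threshold.
    """
    best_name, best_score = None, -1.0
    for name, stored in enrolled.items():
        score = cosine_similarity(probe, stored)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Hypothetical enrolled templates and a newly captured probe.
enrolled = {"alice": [0.9, 0.1, 0.4], "bob": [0.1, 0.8, 0.6]}
probe = [0.88, 0.12, 0.41]
name, score = best_match(probe, enrolled)
print(name, round(score, 3))
```

Raising the threshold makes false matches rarer but rejects more genuine users, so the right value depends on how costly each kind of error is for the use case.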

Best Practices for Accuracy

- Use high-quality cameras

- Enable liveness detection

- Regularly update facial data

- Combine with other authentication methods for sensitive systems

Tools, Software, and Face Recognition Platforms Compared

When choosing face recognition technology, tools fall into two broad categories: free and open-source options, and paid enterprise solutions.

Free and Open-Source Options

Pros:

- Cost-effective

- Customizable

- Good for experimentation

Cons:

- Require technical expertise

- Limited support

- Not always production-ready

Best for developers and researchers testing concepts.

Paid and Enterprise Solutions

Pros:

- High accuracy

- Scalability

- Built-in compliance features

- Customer support

Cons:

- Subscription costs

- Vendor lock-in

Ideal for businesses needing reliability and legal compliance.

What to Look for When Choosing a Tool

- Accuracy across demographics

- Data security and encryption

- Compliance with privacy laws

- Integration with existing systems

There’s no “one-size-fits-all” solution. The best tool depends on your use case, budget, and ethical stance.

Common Mistakes with Face Recognition Technology (and How to Fix Them)

Even powerful technology fails when misused. Here are mistakes I’ve seen repeatedly—and how to avoid them.

Mistake 1: Ignoring Bias and Accuracy Issues

Early face recognition systems performed poorly on certain demographic groups, and poorly tested systems still can. The result is more false positives for those groups and, in practice, discrimination.

Fix:

Choose models trained on diverse datasets and test performance regularly.
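A minimal sketch of what "test performance regularly" could look like: compute error rates separately for each group in an evaluation set and compare them. The group labels and records below are invented for illustration, and the two metrics shown (false match rate and false non-match rate) are one reasonable choice, not the only one.

```python
from collections import defaultdict

# Hypothetical evaluation records:
# (group label, system said "match", pair was truly the same person)
results = [
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_a", True, False),   # false match
    ("group_a", False, False),
    ("group_b", True, True),
    ("group_b", True, False),   # false match
    ("group_b", True, False),   # false match
    ("group_b", False, True),   # false non-match
]


def error_rates_by_group(records):
    """Return false match and false non-match rates for each group."""
    counts = defaultdict(lambda: {"fm": 0, "impostor": 0, "fnm": 0, "genuine": 0})
    for group, predicted, actual in records:
        c = counts[group]
        if actual:
            c["genuine"] += 1
            if not predicted:
                c["fnm"] += 1
        else:
            c["impostor"] += 1
            if predicted:
                c["fm"] += 1
    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "false_match_rate": c["fm"] / c["impostor"] if c["impostor"] else None,
            "false_non_match_rate": c["fnm"] / c["genuine"] if c["genuine"] else None,
        }
    return rates


rates = error_rates_by_group(results)
for group, r in sorted(rates.items()):
    print(group, r)
```

A large gap between groups on either metric is a signal to revisit the training data or the decision threshold before deployment.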

Mistake 2: Poor Consent and Transparency

Deploying face recognition without informing users damages trust and may violate laws.

Fix:

Always provide clear notices, obtain consent, and explain data usage.

Mistake 3: Overreliance on Face Recognition Alone

No system is 100% accurate, so relying on a face match alone means a single false match can let the wrong person in.

Fix:

Use multi-factor authentication for high-risk scenarios.
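Here is a simplified sketch of that rule: a face match is enough for low-risk actions, while high-risk actions also require a second factor. The function name, the 0.9 threshold, and the plain-text PIN comparison are assumptions to keep the example short; a real system would store a salted hash of the PIN or use a one-time code instead.

```python
import hmac


def authenticate(face_score, submitted_pin, expected_pin, high_risk, face_threshold=0.9):
    """Require only a face match for low-risk actions, face plus PIN for high-risk ones."""
    face_ok = face_score >= face_threshold
    if not high_risk:
        return face_ok
    # compare_digest avoids leaking information through comparison timing.
    pin_ok = hmac.compare_digest(submitted_pin.encode(), expected_pin.encode())
    return face_ok and pin_ok


# Hypothetical scenarios: unlocking a screen vs. approving a payment.
print(authenticate(0.95, "", "1234", high_risk=False))
print(authenticate(0.95, "0000", "1234", high_risk=True))
print(authenticate(0.95, "1234", "1234", high_risk=True))
```

The design choice is that a strong face score never overrides a failed second factor when the action is marked high risk.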

Mistake 4: Weak Data Protection

Storing facial data insecurely is a major risk, because a leaked faceprint, unlike a leaked password, cannot be changed.

Fix:

Encrypt data, limit retention, and restrict access.
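The "limit retention" part of this fix can be sketched as a routine purge of templates older than a set window. The 90-day period, the record layout, and the user IDs are hypothetical; encryption and access control are separate concerns that should rely on a vetted cryptography library and your platform's permission system rather than hand-rolled code.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=90)  # hypothetical policy, not a legal recommendation


def purge_expired(store, now, retention=RETENTION):
    """Return a new store holding only records enrolled within the retention window."""
    cutoff = now - retention
    return {uid: rec for uid, rec in store.items() if rec["enrolled_at"] >= cutoff}


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
store = {
    "user_1": {"enrolled_at": now - timedelta(days=10), "template": b"placeholder"},
    "user_2": {"enrolled_at": now - timedelta(days=200), "template": b"placeholder"},
}
kept = purge_expired(store, now)
print(sorted(kept))
```

Running a purge like this on a schedule keeps the amount of stored biometric data, and therefore the impact of any breach, as small as the use case allows.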

Ethical and Privacy Considerations You Can’t Ignore

Face recognition technology deals with one of the most personal identifiers we have—our face. Misuse can lead to mass surveillance, loss of anonymity, and abuse of power.

Key ethical principles include:

- Consent – People should know and agree.

- Purpose Limitation – Use data only for stated goals.

- Accountability – Systems must be auditable.

- Fairness – Avoid bias and discrimination.

Governments worldwide are debating regulations, and businesses that ignore ethics risk backlash, fines, and reputational damage.

The Future of Face Recognition Technology

The next generation of face recognition technology will be smarter, more private, and more regulated.

Trends to watch:

- On-device processing (no cloud storage)

- Improved accuracy with masks and aging

- Stronger privacy-preserving techniques

- Integration with augmented reality and smart cities

The future isn’t about more surveillance—it’s about smarter, safer identity verification.

Conclusion

Face recognition technology is no longer a novelty—it’s a foundational tool shaping security, convenience, and digital identity. When implemented responsibly, it saves time, reduces fraud, and improves experiences. When misused, it threatens privacy and trust.

The key takeaway? Use face recognition with intention. Understand how it works, choose the right tools, respect ethics, and stay informed as regulations evolve.

If you’re exploring this technology for business or personal use, start small, test carefully, and always put people first. Technology should serve humans—not the other way around.

FAQs

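Is face recognition technology safe?

Yes, when implemented with strong security, encryption, and consent-based policies.

How accurate is face recognition technology?

Top systems exceed 99% accuracy in controlled conditions, though real-world performance varies.

Can face recognition work with masks or glasses?

Modern AI models can, though accuracy may slightly decrease.

Is face recognition technology legal?

It depends on your country and use case. Always check local laws.

Can facial recognition data be hacked?

Like any data, yes, if it is poorly protected. Strong security minimizes risk.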

-

HEALTH7 months ago

HEALTH7 months agoChildren’s Flonase Sensimist Allergy Relief: Review

-

BLOG6 months ago

BLOG6 months agoDiscovering The Calamariere: A Hidden Gem Of Coastal Cuisine

-

TECHNOLOGY6 months ago

TECHNOLOGY6 months agoHow to Build a Mobile App with Garage2Global: From Idea to Launch in 2025

-

TECHNOLOGY2 months ago

TECHNOLOGY2 months agoAVtub: The Rise of Avatar-Driven Content in the Digital Age

-

BLOG7 months ago

BLOG7 months agoWarmables Keep Your Lunch Warm ~ Lunch Box Kit Review {Back To School Guide}

-

HEALTH7 months ago

HEALTH7 months agoTurkey Neck Fixes That Don’t Need Surgery

-

BLOG6 months ago

BLOG6 months agoKeyword Optimization by Garage2Global — The Ultimate 2025 Guide

-

EDUCATION2 months ago

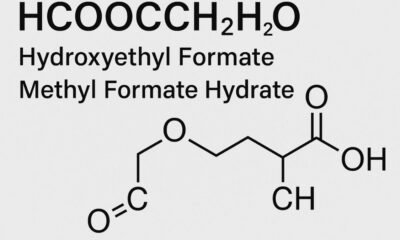

EDUCATION2 months agoHCOOCH CH2 H2O: Structure, Properties, Applications, and Safety of Hydroxyethyl Formate